Researchers have achieved a major leap in quantum computing by simulating Google’s 53-qubit Sycamore circuit using over 1,400 GPUs and groundbreaking algorithmic techniques.

Their efficient tensor network methods and clever “top-k” sampling approach drastically reduce the memory and computational load needed for accurate simulations. These strategies were validated with smaller test circuits and could shape the future of quantum research, pushing the boundaries of what classical systems can simulate.

Simulating Google’s Quantum Circuit

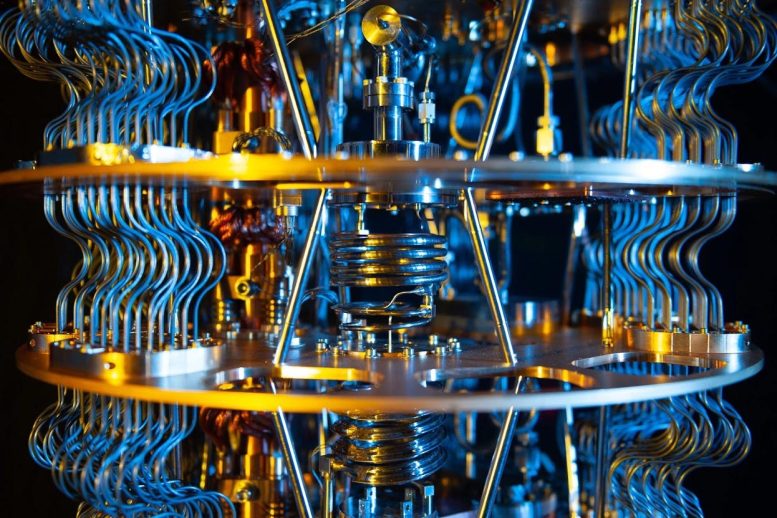

A team of researchers has reached a major milestone in quantum computing by successfully simulating Google’s 53-qubit, 20-layer Sycamore quantum circuit. This was accomplished using 1,432 NVIDIA A100 GPUs and highly optimized parallel algorithms, opening new doors for simulating quantum systems on classical hardware.

Innovations in Tensor Network Algorithms

At the core of this achievement are advanced tensor network contraction techniques, which efficiently estimate the output probabilities of quantum circuits. To make the simulation feasible, the researchers used slicing strategies to break the full tensor network into smaller sub-networks that can be contracted independently. This significantly reduced memory demands while preserving computational efficiency, making it possible to simulate large quantum circuits with comparatively modest resources.
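The idea behind slicing can be illustrated on a toy network. The sketch below is not the researchers' code: the three-tensor ring, the bond dimension `d`, and the helper `sliced_contraction` are hypothetical names chosen for illustration. It fixes one shared index at a time, contracts each smaller sub-network separately, and sums the pieces, which should reproduce the full contraction.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 4  # bond dimension of every index (hypothetical toy value)

# A closed "ring" of three tensors: A[i,j], B[j,k], C[k,i].
A = rng.normal(size=(d, d))
B = rng.normal(size=(d, d))
C = rng.normal(size=(d, d))

# Full contraction: every index is summed in a single call.
full = np.einsum("ij,jk,ki->", A, B, C)

def sliced_contraction(A, B, C):
    """Contract the same ring by fixing index j and summing the pieces."""
    total = 0.0
    for j in range(A.shape[1]):
        # With j fixed, A and B each lose one dimension, so every
        # sub-network holds smaller tensors and is independent of the others.
        total += np.einsum("i,k,ki->", A[:, j], B[j, :], C)
    return total

sliced = sliced_contraction(A, B, C)
print(np.isclose(full, sliced))
```

Because the loop iterations do not depend on one another, each one could in principle be sent to a different GPU, which is the property that makes slicing attractive for large simulations.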

The team also used a “top-k” sampling method, which selects the most probable bitstrings from the simulation output. By focusing only on these high-probability results, they improved the linear cross-entropy benchmark (XEB), a key measure of how closely the sampled output matches the expected quantum behavior. This not only boosted simulation accuracy but also reduced the computational load, making the process faster and more scalable.
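A small numerical sketch can show why keeping only high-probability bitstrings raises the XEB score. It uses the standard linear XEB estimator, 2^n times the mean ideal probability of the sampled bitstrings, minus 1. Everything else is an assumption made for illustration: a random 10-qubit state stands in for a random circuit's output, and the top-k step is simplified to picking the `k` largest probabilities from the whole distribution rather than following the paper's exact procedure.

```python
import numpy as np

def linear_xeb(probs, samples, n_qubits):
    """Linear XEB: 2^n * mean ideal probability of the samples, minus 1."""
    return (2 ** n_qubits) * probs[samples].mean() - 1.0

rng = np.random.default_rng(1)
n_qubits = 10
dim = 2 ** n_qubits

# Stand-in for a random circuit's output: a random complex state vector.
amps = rng.normal(size=dim) + 1j * rng.normal(size=dim)
probs = np.abs(amps) ** 2
probs /= probs.sum()

n_samples = 2000
uniform_samples = rng.integers(0, dim, size=n_samples)    # pure noise
ideal_samples = rng.choice(dim, size=n_samples, p=probs)  # perfect sampler
k = 10
topk_samples = np.argsort(probs)[-k:]                     # k most probable bitstrings

xeb_uniform = linear_xeb(probs, uniform_samples, n_qubits)
xeb_ideal = linear_xeb(probs, ideal_samples, n_qubits)
xeb_topk = linear_xeb(probs, topk_samples, n_qubits)
print(xeb_uniform, xeb_ideal, xeb_topk)
```

In this toy setting, uniform guessing scores near 0, faithful sampling scores near 1, and the top-k selection scores well above 1, since it keeps only bitstrings whose ideal probability is unusually large.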

Validating with Smaller Circuits

To validate their algorithm, the researchers conducted numerical experiments with smaller-scale random circuits, including a 30-qubit, 14-layer circuit. The results showed excellent agreement with theoretically predicted XEB values for various tensor contraction sub-network sizes. The top-k method’s enhancement of the XEB value also closely matched theoretical predictions, affirming the accuracy and efficiency of the algorithm.
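The kind of check described here can be mimicked with a toy model. The sketch below rests on two assumptions that are not taken from the article: each slice of the network contributes an independent complex Gaussian vector of amplitudes, and the theoretical expectation is that contracting a fraction of the slices yields an XEB close to that fraction. The names `parts`, `normalized_probs`, and `expected_xeb` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
n_qubits = 16
dim = 2 ** n_qubits
n_slices = 8

# Toy assumption: every slice adds an independent complex Gaussian
# contribution to each output amplitude.
parts = rng.normal(size=(n_slices, dim)) + 1j * rng.normal(size=(n_slices, dim))

def normalized_probs(amplitudes):
    """Turn unnormalized amplitudes into a probability distribution."""
    p = np.abs(amplitudes) ** 2
    return p / p.sum()

# Ideal distribution: all slices summed.
ideal = normalized_probs(parts.sum(axis=0))

def expected_xeb(n_used):
    """Expected linear XEB when sampling from the first n_used slices only."""
    approx = normalized_probs(parts[:n_used].sum(axis=0))
    return dim * np.dot(approx, ideal) - 1.0

results = {m: expected_xeb(m) for m in (2, 4, 6, 8)}
for m, xeb in results.items():
    print(f"{m}/{n_slices} slices: XEB = {xeb:.3f}, fraction = {m / n_slices:.3f}")
```

Under these assumptions the computed XEB tracks the fraction of slices used, which is the type of agreement between numerical and predicted values that a validation run on small circuits would look for.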

Streamlining Tensor Contraction Performance

The study also highlighted strategies for optimizing tensor contraction resource requirements. By refining the order of tensor indices and minimizing inter-GPU communication, the team achieved notable improvements in computational efficiency. Complexity estimates also indicate that increasing the available memory, for example to 80 GB, 640 GB, or 5,120 GB, can significantly reduce the computational time complexity. Each computational node used an 8×80 GB memory configuration to enable high-performance computing.
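A rough back-of-the-envelope calculation suggests why more memory helps. The sketch below assumes, purely for illustration, that the largest intermediate tensor has 2^40 elements of 8 bytes each (complex64), that all indices have dimension 2, and that the whole memory budget is available to that one tensor. None of these figures come from the study, and `max_tensor_rank` and `subtasks_needed` are hypothetical helpers.

```python
def max_tensor_rank(mem_bytes, bytes_per_element=8):
    """Largest r such that a tensor with 2**r elements fits in mem_bytes."""
    return (mem_bytes // bytes_per_element).bit_length() - 1

def subtasks_needed(tensor_rank, mem_bytes, bytes_per_element=8):
    """Number of sub-networks after slicing enough binary indices to fit."""
    sliced = max(0, tensor_rank - max_tensor_rank(mem_bytes, bytes_per_element))
    return 2 ** sliced

GB = 10 ** 9
HYPOTHETICAL_RANK = 40  # assumed size of the largest intermediate tensor

counts = {gb: subtasks_needed(HYPOTHETICAL_RANK, gb * GB) for gb in (80, 640, 5120)}
for gb, n in counts.items():
    print(f"{gb} GB -> {n} sub-networks")
```

With these assumed numbers, the count of sub-networks falls from 128 at 80 GB to 16 at 640 GB and 2 at 5,120 GB. Fewer slices generally mean less repeated work across sub-networks, which is one way larger memory can translate into lower total computational cost.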

Future of Quantum Simulations

This breakthrough not only establishes a new benchmark for classical simulations of multi-qubit quantum computers but also introduces innovative tools and methodologies for future quantum computing research. By continuing to refine algorithms and optimize computational resources, the researchers anticipate making substantial progress in simulating larger quantum circuits with more qubits. This work represents a significant advancement in quantum computing, offering valuable insights for the ongoing development of quantum technologies.

Reference: “Leapfrogging Sycamore: harnessing 1432 GPUs for 7× faster quantum random circuit sampling” by Xian-He Zhao, Han-Sen Zhong, Feng Pan, Zi-Han Chen, Rong Fu, Zhongling Su, Xiaotong Xie, Chaoxing Zhao, Pan Zhang, Wanli Ouyang, Chao-Yang Lu, Jian-Wei Pan and Ming-Cheng Chen, 12 September 2024, National Science Review.

DOI: 10.1093/nsr/nwae317
