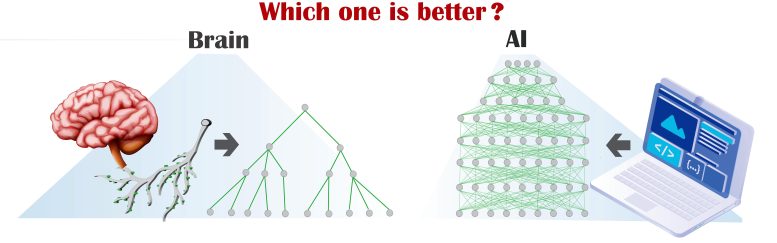

Can the brain, limited in its ability to perform precise math, compete with AI systems run on high-speed parallel computers? For many tasks, everyday experience shows that it can. Given this, can a more efficient AI be built based on the brain’s design?

Although the brain’s architecture is very shallow, learning on brain-inspired artificial neural networks can outperform deep learning.

Artificial intelligence was originally inspired by the dynamics of the human brain. However, brain learning is restricted in several significant respects compared with deep learning (DL). First, efficient DL wiring structures (architectures) consist of many tens of feedforward (consecutive) layers, whereas brain dynamics involve only a few feedforward layers. Second, DL architectures typically consist of many consecutive filter layers, which are essential for identifying the class of the input. If the input is a car, for example, the first filter identifies wheels, the second doors, the third lights, and only after many additional filters does it become clear that the input object is, indeed, a car. Conversely, brain dynamics contain just a single filter located close to the retina. Third, DL relies on a mathematically complex training procedure, which is evidently far beyond biological realization.

Scheme of a simple neural network based on dendritic tree (left) vs. a complex artificial intelligence deep learning architecture (right). Credit: Prof. Ido Kanter, Bar-Ilan University

Can the brain, with its limited realization of precise mathematical operations, compete with advanced artificial intelligence systems implemented on fast, parallel computers? From daily experience we know that for many tasks the answer is yes! Why is this so, and can one build a new type of efficient artificial intelligence inspired by the brain? In an article published today (January 30) in the journal Scientific Reports, researchers from Bar-Ilan University in Israel solve this puzzle.

“We’ve shown that efficient learning on an artificial tree architecture, where each weight has a single route to an output unit, can achieve better classification success rates than previously achieved by DL architectures consisting of more layers and filters. This finding paves the way for efficient, biologically-inspired new AI hardware and algorithms,” said Prof. Ido Kanter, of Bar-Ilan’s Department of Physics and Gonda (Goldschmied) Multidisciplinary Brain Research Center, who led the research.

“Highly pruned tree architectures represent a step toward a plausible biological realization of efficient dendritic tree learning by a single or several neurons, with reduced complexity and energy consumption, and biological realization of backpropagation mechanism, which is currently the central technique in AI,” added Yuval Meir, a PhD student and contributor to this work.
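To make the phrase “each weight has a single route to an output unit” concrete, here is a minimal Python sketch of a toy two-level tree. The layout is an assumption chosen for illustration, not the architecture from the paper: the input is split into disjoint slices, each slice feeds one branch unit, and a single output unit sums the branch outputs. Because every first-level weight reaches the output through exactly one branch, its backpropagated gradient is a single product along that route rather than a sum over many paths. The function names `tree_forward` and `tree_grad` and the tanh nonlinearity are hypothetical choices.

```python
import math
import random

# Hypothetical toy "tree": disjoint input slices -> one branch unit each -> one output.
# This illustrates the single-route property only; it is NOT the paper's architecture.


def tree_forward(x, w, v):
    """Forward pass of the toy tree.

    x: flat list of inputs, length = len(w) * branch_size
    w: w[b] is the weight list of branch b; it only sees its own slice of x
    v: v[b] connects branch b to the single output unit
    Returns (output, branch_activations).
    """
    size = len(w[0])
    acts = []
    for b, wb in enumerate(w):
        xs = x[b * size:(b + 1) * size]  # disjoint slice: no input is shared between branches
        acts.append(math.tanh(sum(wi * xi for wi, xi in zip(wb, xs))))
    out = sum(vb * hb for vb, hb in zip(v, acts))  # linear output unit
    return out, acts


def tree_grad(x, w, v):
    """Gradient d(out)/d(w[b][i]) via backpropagation along each weight's single route.

    w[b][i] reaches the output only through branch b and then v[b], so the chain
    rule yields one product per weight instead of a sum over many paths.
    """
    size = len(w[0])
    _, acts = tree_forward(x, w, v)
    grads = []
    for b in range(len(w)):
        local = v[b] * (1.0 - acts[b] ** 2)  # v[b] * tanh'(z_b)
        grads.append([local * x[b * size + i] for i in range(size)])
    return grads


# Usage: compare the analytic gradient of one weight with a finite-difference estimate.
random.seed(0)
branches, size = 3, 4
x = [random.uniform(-1, 1) for _ in range(branches * size)]
w = [[random.uniform(-1, 1) for _ in range(size)] for _ in range(branches)]
v = [random.uniform(-1, 1) for _ in range(branches)]

g = tree_grad(x, w, v)
eps = 1e-6
saved = w[1][2]
w[1][2] = saved + eps
up, _ = tree_forward(x, w, v)
w[1][2] = saved - eps
down, _ = tree_forward(x, w, v)
w[1][2] = saved  # restore the original weight
print(g[1][2], (up - down) / (2 * eps))  # the two values should agree closely
```

In a fully connected multilayer network, by contrast, a first-layer weight influences the output through every unit in the layers above it, so its gradient accumulates contributions from many routes.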

Efficient dendritic tree learning builds on previous experimental research by Kanter’s team, conducted by Dr. Roni Vardi, which found evidence of sub-dendritic adaptation in neuronal cultures, together with other anisotropic properties of neurons, such as different spike waveforms, refractory periods, and maximal transmission rates.

The efficient implementation of highly pruned tree training requires a new type of hardware, different from the emerging GPUs, which are better suited to the current DL strategy. Such new hardware is needed to imitate brain dynamics efficiently.

Reference: “Learning on tree architectures outperforms a convolutional feedforward network” by Yuval Meir, Itamar Ben-Noam, Yarden Tzach, Shiri Hodassman and Ido Kanter, 30 January 2023, Scientific Reports.

DOI: 10.1038/s41598-023-27986-6
