Services often mischaracterize trans and non-binary individuals.

With a brief glance at a single face, emerging facial recognition software can now categorize the gender of many men and women with remarkable accuracy. But if that face belongs to a transgender person, such systems get it wrong more than one third of the time, according to new CU Boulder research.

“We found that facial analysis services performed consistently worse on transgender individuals, and were universally unable to classify non-binary genders,” said lead author Morgan Klaus Scheuerman, a Ph.D. student in the Information Science department. “While there are many different types of people out there, these systems have an extremely limited view of what gender looks like.”

The study comes at a time when facial analysis technologies, which use cameras (sometimes hidden ones) to assess and characterize certain features of an individual, are becoming increasingly prevalent. They are embedded in everything from smartphone dating apps and digital kiosks at malls to airport security and law enforcement surveillance systems.

Previous research suggests they tend to be most accurate when assessing the gender of white men, but misidentify women of color as much as one-third of the time.

“We knew there were inherent biases in these systems around race and ethnicity and we suspected there would also be problems around gender,” said senior author Jed Brubaker, an assistant professor of Information Science. “We set out to test this in the real world.”

For some gender identities, accuracy is impossible

Researchers collected 2,450 images of faces from Instagram, each of which had been labeled by its owner with a hashtag indicating their gender identity. The pictures were then divided into seven groups of 350 images (#woman, #man, #transwoman, #transman, #agender, #genderqueer, #nonbinary) and analyzed by four of the largest providers of facial analysis services (IBM, Amazon, Microsoft and Clarifai).

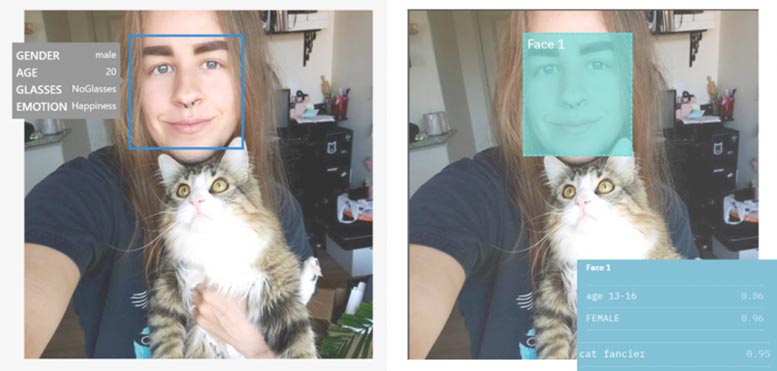

When researcher Morgan Klaus Scheuerman, who is a man, submitted his photo to several facial analysis services, half got his gender wrong. Credit: Morgan Klaus Scheuerman/CU Boulder

Notably, Google was not included because it does not offer gender recognition services.

On average, the systems were most accurate with photos of cisgender women (those born female and identifying as female), getting their gender right 98.3% of the time. They categorized cisgender men accurately 97.6% of the time.

But trans men were wrongly identified as women up to 38% of the time. And those who identified as agender, genderqueer or nonbinary, meaning they identify as neither male nor female, were mischaracterized 100% of the time.

“These systems don’t know any other language but male or female, so for many gender identities it is not possible for them to be correct,” said Brubaker.

Outdated stereotypes persist

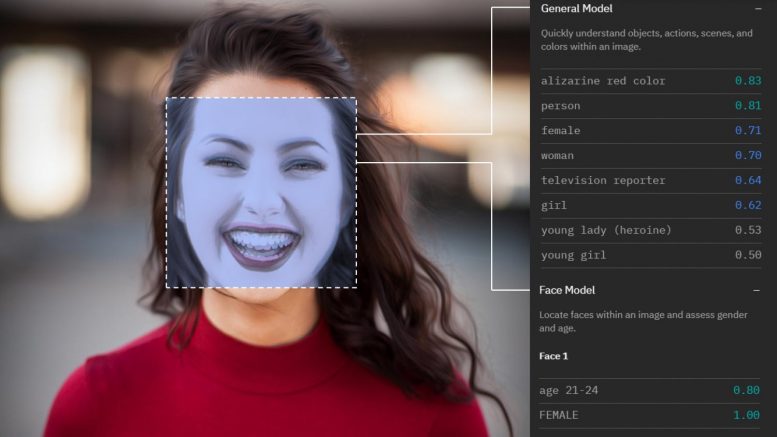

The study also suggests that such services identify gender based on outdated stereotypes.

When Scheuerman, who is male and has long hair, submitted his own picture, half of the services categorized him as female.

The researchers could not access the training data (the image inputs used to “teach” a system what male and female look like), but previous research suggests such systems assess features like eye position, lip fullness, hair length and even clothing.

“These systems run the risk of reinforcing stereotypes of what you should look like if you want to be recognized as a man or a woman. And that impacts everyone,” said Scheuerman.

The market for facial recognition services is projected to double by 2024, as tech developers work to improve human-robot interaction and more carefully target ads to shoppers.

“They want to figure out what your gender is, so they can sell you something more appropriate for your gender,” explained Scheuerman, pointing to a highly publicized incident in which a mall in Canada used a hidden camera in a kiosk to do this.

Already, Brubaker noted, people engage with facial recognition technology every day to gain access to their smartphones or log into their computers. If it has a tendency to misgender certain populations that are already vulnerable, that could have grave consequences.

For instance, a matchmaking app could set someone up on a date with a person of the wrong gender, leading to a potentially dangerous situation. Or a mismatch between the gender a facial recognition program sees and the documentation a person carries could lead to problems getting through airport security, said Scheuerman. He is most concerned that such systems reaffirm notions that transgender people don’t fit in.

“People think of computer vision as futuristic, but there are lots of people who could be left out of this so-called future,” he said.

Two facial analysis systems identified the gender of researcher Morgan Klaus Scheuerman, who is a man, differently.

The authors say they’d like to see tech companies move away from gender classification entirely and stick to more specific labels like “long hair” or “make-up” when assessing images.

“When you walk down the street you might look at someone and presume that you know what their gender is, but that is a really quaint idea from the ‘90s and it is not what the world is like anymore,” said Brubaker. “As our vision and our cultural understanding of what gender is has evolved, the algorithms driving our technological future have not. That’s deeply problematic.”

###

Reference: “How Computers See Gender: An Evaluation of Gender Classification in Commercial Facial Analysis Services” by Morgan Klaus Scheuerman, Jacob M. Paul and Jed R. Brubaker, 7 November 2019, Proceedings of the ACM on Human-Computer Interaction, Volume 3, Issue CSCW, Article No. 144.

DOI: 10.1145/3359246

The research will be presented in November at the ACM Conference on Computer-Supported Cooperative Work in Austin, Texas.
