Researchers discovered that the AI system they examined represents word meanings in a manner that closely resembles human judgment.

Models for natural language processing use statistics to collect a wealth of information about word meanings.

In “Through the Looking Glass,” Humpty Dumpty says scornfully, “When I use a word, it means just what I choose it to mean — neither more nor less.” Alice replies, “The question is whether you can make words mean so many different things.”

Word meanings have long been the subject of research. To comprehend a word, the human mind must sort through a complex network of flexible, detailed information.

Now a newer question about word meaning has emerged: whether machines with artificial intelligence can mimic human thought processes and comprehend words in a similar way. Researchers from UCLA, MIT, and the National Institutes of Health have just published a study that addresses that question.

The study, published in the journal Nature Human Behaviour, demonstrates that artificial intelligence systems can indeed learn highly complex word meanings. The researchers also found a simple method for gaining access to this sophisticated information. They discovered that the AI system they examined represents word meanings in a manner that closely resembles human judgment.

The AI system studied by the authors has been widely used to analyze word meaning over the past decade. It picks up word meanings by “reading” enormous quantities of material on the internet, which contains tens of billions of words.

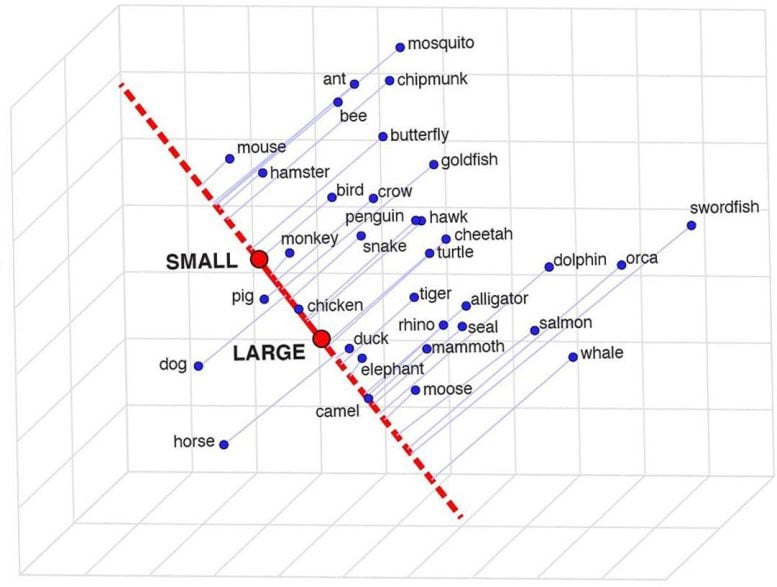

A depiction of semantic projection, which can determine the similarity between two words in a specific context. This grid shows how similar certain animals are based on their size. Credit: Idan Blank/UCLA

When words frequently occur together, such as “table” and “chair,” the system learns that their meanings are related. And if pairs of words occur together very rarely, like “table” and “planet,” it learns that they have very different meanings.
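As a rough illustration of the counting idea described above, the following Python sketch tallies how often two words share a sentence. The corpus and the function name `cooccurrence_counts` are invented for this example; the system in the study learns from tens of billions of words and is considerably more sophisticated than a raw count.

```python
from collections import Counter
from itertools import combinations

# Toy corpus, invented for illustration; real systems read billions of words.
corpus = [
    "the chair is next to the table",
    "she set the table and pulled up a chair",
    "the planet orbits a distant star",
    "a chair and a table stood in the room",
]

def cooccurrence_counts(sentences):
    """Count how often each unordered pair of distinct words shares a sentence."""
    counts = Counter()
    for sentence in sentences:
        # Sort the unique words so each pair is stored in alphabetical order.
        words = sorted(set(sentence.split()))
        for pair in combinations(words, 2):
            counts[pair] += 1
    return counts

counts = cooccurrence_counts(corpus)
table_chair = counts[("chair", "table")]    # pairs are keyed alphabetically
table_planet = counts[("planet", "table")]  # a missing pair counts as zero
print(table_chair, table_planet)
```

In this toy corpus, “table” and “chair” appear together far more often than “table” and “planet,” which is the kind of signal the article describes.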

That approach seems like a logical starting point, but consider how well humans would understand the world if the only way to understand meaning was to count how often words occur near each other, without any ability to interact with other people and our environment.

Idan Blank, a UCLA assistant professor of psychology and linguistics, and the study’s co-lead author, said the researchers set out to learn what the system knows about the words it learns, and what kind of “common sense” it has.

Before the research began, Blank said, the system appeared to have one major limitation: “As far as the system is concerned, every two words have only one numerical value that represents how similar they are.”

In contrast, human knowledge is much more detailed and complex.

“Consider our knowledge of dolphins and alligators,” Blank said. “When we compare the two on a scale of size, from ‘small’ to ‘big,’ they are relatively similar. In terms of their intelligence, they are somewhat different. In terms of the danger they pose to us, on a scale from ‘safe’ to ‘dangerous,’ they differ greatly. So a word’s meaning depends on context.

“We wanted to ask whether this system actually knows these subtle differences — whether its idea of similarity is flexible in the same way it is for humans.”

To find out, the authors developed a technique they call “semantic projection.” One can draw a line between the model’s representations of the words “big” and “small,” for example, and see where the representations of different animals fall on that line.
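The line-drawing idea can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the three-dimensional vectors are made up for illustration (real word representations have hundreds of dimensions), the helper `project` is a hypothetical name, and rescaling the result so that “small” sits at 0 and “big” at 1 is a convenience chosen here.

```python
# Hypothetical 3-dimensional word vectors, invented for illustration only.
vectors = {
    "small": [-1.0, 0.0, 0.2],
    "big": [1.0, 0.0, 0.2],
    "mouse": [-0.8, 0.5, 0.1],
    "dog": [-0.1, 0.6, 0.3],
    "elephant": [0.9, 0.4, 0.2],
}

def subtract(a, b):
    """Element-wise difference a - b."""
    return [x - y for x, y in zip(a, b)]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def project(word, low, high, vecs):
    """Position of `word` along the line from `low` to `high`.

    A value of 0.0 corresponds to `low` and 1.0 to `high`.
    """
    axis = subtract(vecs[high], vecs[low])      # direction of the scale
    offset = subtract(vecs[word], vecs[low])    # word relative to the low end
    return dot(offset, axis) / dot(axis, axis)  # scalar projection, rescaled

animals = ["dog", "elephant", "mouse"]
by_size = sorted(animals, key=lambda w: project(w, "small", "big", vectors))
print(by_size)
```

Swapping in a different pair of endpoint words, for example “safe” and “dangerous,” would reorder the same animals along a different scale, which is how a single set of representations can support many context-dependent comparisons.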

Using that method, the scientists studied 52 word groups to see whether the system could learn to sort meanings, such as judging animals by either their size or how dangerous they are to humans, or classifying U.S. states by weather or by overall wealth.

Among the other word groupings were terms related to clothing, professions, sports, mythological creatures, and first names. Each category was assigned multiple contexts or dimensions — size, danger, intelligence, age, and speed, for example.

The researchers found that, across those many objects and contexts, the results of their method closely matched human intuition. (To make that comparison, the researchers also asked cohorts of 25 people each to make similar assessments about each of the 52 word groups.)
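One common way to quantify this kind of agreement is a correlation coefficient between the model's scores and the averaged human ratings. The article does not say which measure the researchers used, so the sketch below is only an assumption about how such a comparison might look. All numbers are made up for illustration and are not data from the study.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up values for four hypothetical items: positions on a model-derived
# size scale, and average human size ratings on a 1-to-5 scale.
model_scores = [0.10, 0.45, 0.95, 0.60]
human_ratings = [1.2, 2.9, 4.8, 3.5]

r = pearson(model_scores, human_ratings)
print(round(r, 3))
```

A value of `r` near 1 would indicate that the model orders and spaces the items much as people do, while a value near 0 would indicate no linear relationship.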

Remarkably, the system learned that the names “Betty” and “George” are similar in being relatively “old” but represent different genders, and that “weightlifting” and “fencing” are similar in that both typically take place indoors but differ in how much intelligence they require.

“It is such a beautifully simple method and completely intuitive,” Blank said. “The line between ‘big’ and ‘small’ is like a mental scale, and we put animals on that scale.”

Blank said he actually didn’t expect the technique to work but was delighted when it did.

“It turns out that this machine learning system is much smarter than we thought; it contains very complex forms of knowledge, and this knowledge is organized in a very intuitive structure,” he said. “Just by keeping track of which words co-occur with one another in language, you can learn a lot about the world.”

Reference: “Semantic projection recovers rich human knowledge of multiple object features from word embeddings” by Gabriel Grand, Idan Asher Blank, Francisco Pereira, and Evelina Fedorenko, 14 April 2022, Nature Human Behaviour.

DOI: 10.1038/s41562-022-01316-8

The study was funded by the Office of the Director of National Intelligence, Intelligence Advanced Research Projects Activity through the Air Force Research Laboratory.
