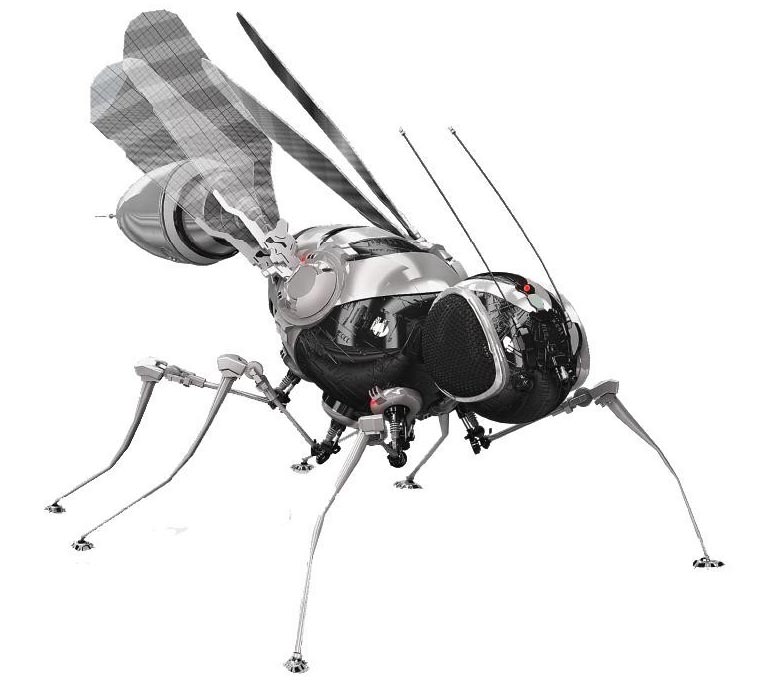

NeuroMechFly, the first accurate “digital twin” of the fly Drosophila melanogaster, offers a highly valuable testbed for studies that advance biomechanics and biorobotics. This could help pave the way for fly-like robots, such as the one illustrated here. Credit: EPFL

A Digital Twin of Drosophila

“We used two kinds of data to build NeuroMechFly,” says Professor Pavan Ramdya at the School of Life Sciences at Ecole Polytechnique Fédérale de Lausanne (EPFL). “First, we took a real fly and performed a CT scan to build a morphologically realistic biomechanical model. The second source of data was the real limb movements of the fly, obtained using pose estimation software that we’ve developed in the last couple of years that allows us to precisely track the movements of the animal.”

Ramdya’s group, working with the group of Professor Auke Ijspeert at EPFL’s Biorobotics Laboratory, is publishing a paper today (May 11, 2022) in the journal Nature Methods showcasing the first ever accurate “digital twin” of the fly Drosophila melanogaster, dubbed “NeuroMechFly.”

Time flies

Drosophila is the most commonly used insect in the life sciences and a long-term research focus for Ramdya, who has been working on digitally tracking and modeling this animal for years. In 2019, his group published DeepFly3D, deep-learning-based motion-capture software that uses multiple camera views to quantify the movements of Drosophila in three-dimensional space.

Continuing with deep learning, in 2021 Ramdya’s team published LiftPose3D, a method for reconstructing 3D animal poses from 2D images taken from a single camera. These kinds of breakthroughs have provided the rapidly growing fields of neuroscience and animal-inspired robotics with tools whose usefulness cannot be overstated.

In many ways, NeuroMechFly represents a culmination of all those efforts. Constrained by morphological and kinematic data from these previous studies, the model features independent computational modules that simulate different parts of the insect’s body. These include a biomechanical exoskeleton with articulating body parts, such as the head, legs, wings, abdominal segments, proboscis, antennae, and halteres (organs that help the fly measure its own orientation while flying), as well as neural network “controllers” with a motor output.

Why build a digital twin of Drosophila?

“How do we know when we’ve understood a system?” says Ramdya. “One way is to be able to recreate it. We might try to build a robotic fly, but it’s much faster and easier to build a simulated animal. So one of the major motivations behind this work is to start building a model that integrates what we know about the fly’s nervous system and biomechanics to test if it is enough to explain its behavior.”

“When we do experiments, we are often motivated by hypotheses,” he adds. “Until now, we’ve relied upon intuition and logic to formulate hypotheses and predictions. But as neuroscience becomes increasingly complicated, we rely more on models that can bring together many intertwined components, play them out, and predict what might happen if you made a tweak here or there.”

The testbed

NeuroMechFly offers a highly valuable testbed for studies that advance biomechanics and biorobotics, but only in so far as it accurately represents the real animal in a digital environment. Verifying this was one of the researchers’ main concerns. “We performed validation experiments which demonstrate that we can closely replicate the behaviors of the real animal,” says Ramdya.

The researchers first made 3D measurements of real walking and grooming flies. They then replayed those behaviors using NeuroMechFly’s biomechanical exoskeleton inside a physics-based simulation environment.

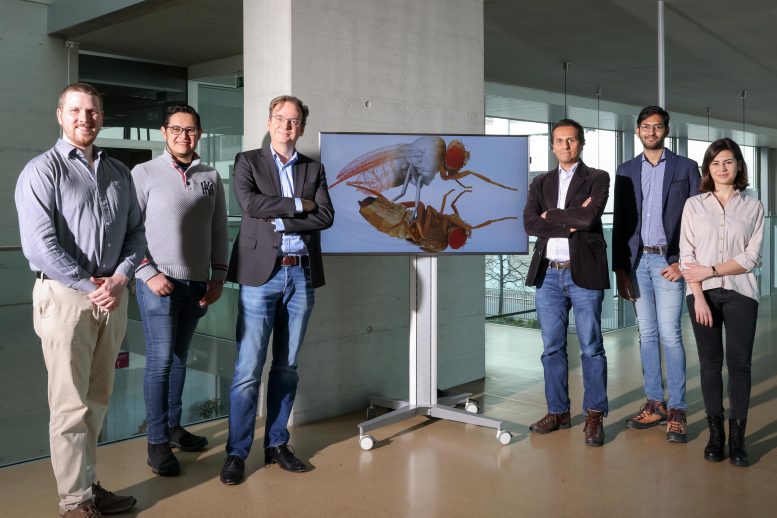

Jonathan Arreguit, Victor Lobato Ríos, Auke Ijspeert, Pavan Ramdya, Shravan Tata Ramalingasetty, and Gizem Özdil. Credit: Alain Herzog (EPFL)

As they show in the paper, the model can actually predict various movement parameters that are otherwise unmeasured, such as the legs’ torques and contact reaction forces with the ground. Finally, they were able to use NeuroMechFly’s full neuromechanical capabilities to discover neural network and muscle parameters that allow the fly to “run” in ways that are optimized for both speed and stability.
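The replay idea described above can be illustrated with a toy example. The Python sketch below is not NeuroMechFly’s code or API. It assumes a single hinge joint with a made-up inertia, a made-up “recorded” trajectory, and a simple proportional-derivative (PD) controller standing in for a full physics engine; the function name `replay_joint` and every parameter value are hypothetical. It shows how driving a simulated joint along measured angles yields, as a by-product, the torque that would be needed to produce the motion, a quantity that is not measured directly.

```python
import math


def replay_joint(recorded_angles, dt=0.001, inertia=1.0, kp=10000.0, kd=200.0):
    """Replay recorded joint angles on a toy one-joint model.

    All parameters are hypothetical and in arbitrary units.
    Expects at least two recorded frames.
    Returns (simulated angles, applied torques), one entry per frame.
    """
    n = len(recorded_angles)
    angle = recorded_angles[0]  # start the model at the first recorded pose
    velocity = 0.0
    sim_angles, torques = [], []
    for i, target in enumerate(recorded_angles):
        # Estimate the target velocity by finite differences of the recording.
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        target_velocity = (recorded_angles[hi] - recorded_angles[lo]) / ((hi - lo) * dt)
        # PD control: the torque that pulls the joint toward the recorded pose.
        torque = kp * (target - angle) + kd * (target_velocity - velocity)
        sim_angles.append(angle)
        torques.append(torque)
        # Semi-implicit Euler step of the joint dynamics (inertia * accel = torque).
        velocity += (torque / inertia) * dt
        angle += velocity * dt
    return sim_angles, torques


# Made-up "recording": a 1 Hz joint oscillation of 0.5 rad, sampled for 2 s.
dt = 0.001
recorded = [0.5 * math.sin(2 * math.pi * i * dt) for i in range(2000)]
sim_angles, torques = replay_joint(recorded, dt=dt)

max_error = max(abs(s - r) for s, r in zip(sim_angles, recorded))
peak_torque = max(abs(t) for t in torques[1000:])
print(f"max tracking error: {max_error:.4f} rad")
print(f"peak torque in the second half: {peak_torque:.2f}")
```

In a full model, the same principle would apply to every joint at once, with the physics engine also reporting ground contact forces. The toy version keeps only the core step: follow the measured motion, then read out the torque the controller had to apply.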

“These case studies built our confidence in the model,” says Ramdya. “But we are most interested in when the simulation fails to replicate animal behavior, pointing out ways to improve the model.” Thus, NeuroMechFly represents a powerful testbed for building an understanding of how behaviors emerge from interactions between complex neuromechanical systems and their physical surroundings.

A community effort

Ramdya stresses that NeuroMechFly has been and will continue to be a community project. As such, the software is open source and freely available for scientists to use and modify. “We built a tool, not just for us, but also for others. Therefore, we made it open source and modular, and provide guidelines on how to use and modify it.”

“More and more, progress in science depends on a community effort,” he adds. “It’s important for the community to use the model and improve it. But one of the things NeuroMechFly already does is to raise the bar. Before, because models were not very realistic, we didn’t ask how they could be directly informed by data. Here we’ve shown how you can do that; you can take this model, replay behaviors, and infer meaningful information. So this, I think, is a big step forward.”

Reference: “NeuroMechFly, a neuromechanical model of adult Drosophila melanogaster” by Victor Lobato Ríos, Shravan Tata Ramalingasetty, Pembe Gizem Özdil, Jonathan Arreguit, Auke Jan Ijspeert and Pavan Ramdya, 11 May 2022, Nature Methods.

DOI: 10.1038/s41592-022-01466-7
