Astronomers have created AbacusSummit, over 160 simulations depicting how gravity shaped the distribution of dark matter in the universe. With nearly 60 trillion particles in total, this set of cosmological simulations is the largest ever produced.

Collectively clocking in at nearly 60 trillion particles, a newly released set of cosmological simulations is by far the biggest ever produced.

The simulation suite, dubbed AbacusSummit, will be instrumental for extracting secrets of the universe from upcoming surveys of the cosmos, its creators predict. They present AbacusSummit in several recently published papers in the Monthly Notices of the Royal Astronomical Society.

AbacusSummit is the product of researchers at the Flatiron Institute’s Center for Computational Astrophysics (CCA) in New York City and the Center for Astrophysics | Harvard & Smithsonian. Made up of more than 160 simulations, it models how particles in the universe move about due to their gravitational attraction. Such models, known as N-body simulations, capture the behavior of dark matter, a mysterious and invisible form of matter that makes up 27 percent of the universe and interacts only via gravity.

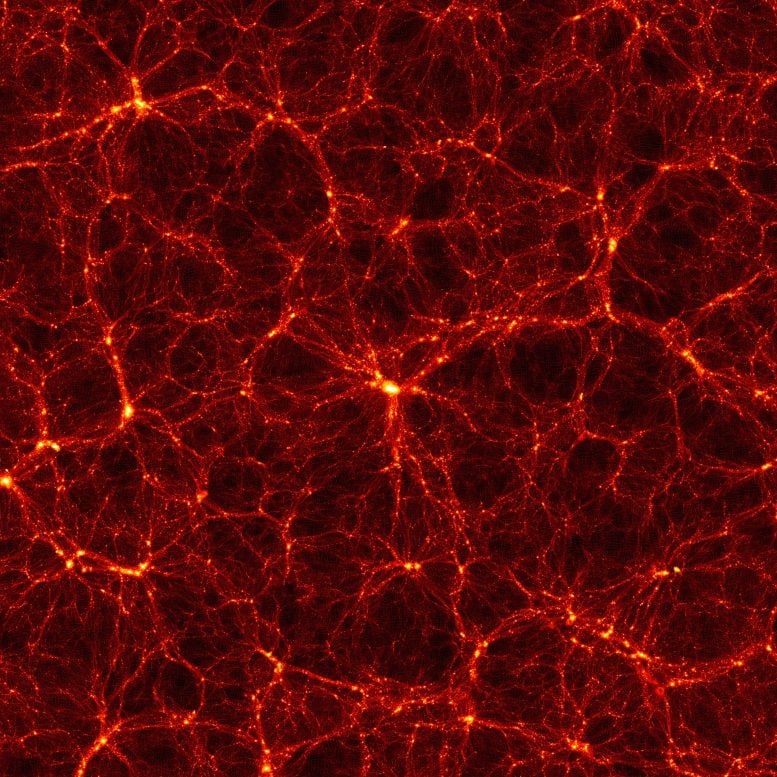

The AbacusSummit suite comprises more than 160 simulations of how gravity shaped the distribution of dark matter throughout the universe. Here, a snapshot of one of the simulations is shown at a zoom scale of 1.2 billion light-years across. The simulation replicates the large-scale structures of our universe, such as the cosmic web and colossal clusters of galaxies. Credit: The AbacusSummit Team; layout and design by Lucy Reading-Ikkanda

“This suite is so big that it probably has more particles than all the other N-body simulations that have ever been run combined — though that’s a hard statement to be certain of,” says Lehman Garrison, lead author of one of the new papers and a CCA research fellow.

Garrison led the development of the AbacusSummit simulations along with graduate student Nina Maksimova and professor of astronomy Daniel Eisenstein, both of the Center for Astrophysics. The simulations ran on the U.S. Department of Energy’s Summit supercomputer at the Oak Ridge Leadership Computing Facility in Tennessee.

Several sky surveys will produce maps of the cosmos with unprecedented detail in the coming years. These include the Dark Energy Spectroscopic Instrument (DESI), the Nancy Grace Roman Space Telescope, the Vera C. Rubin Observatory and the Euclid spacecraft. One of the goals of these big-budget projects is to improve estimates of the cosmic and astrophysical parameters that determine how the universe behaves and how it looks.

Scientists will make those improved estimations by comparing the new observations to computer simulations of the universe with different values for the various parameters — such as the nature of the dark energy pulling the universe apart.

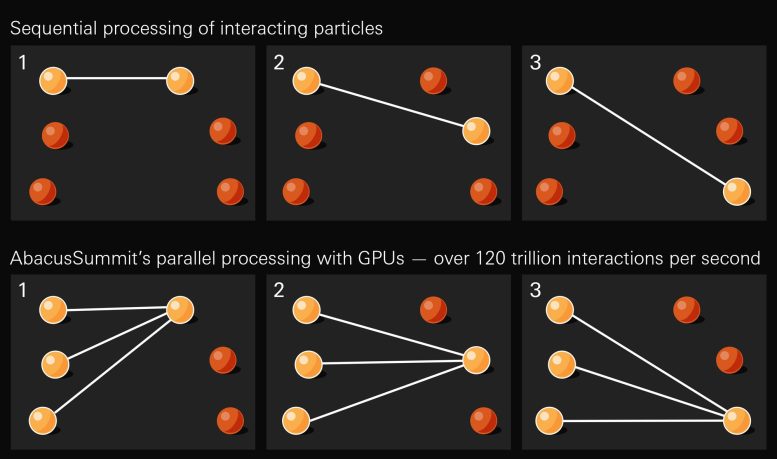

Abacus leverages parallel computer processing to drastically speed up its calculations of how particles move about due to their gravitational attraction. A sequential processing approach (top) computes the gravitational tug between each pair of particles one by one. Parallel processing (bottom) instead divides the work across multiple computing cores, enabling the calculation of multiple particle interactions simultaneously. Credit: Lucy Reading-Ikkanda/Simons Foundation

“The coming generation of cosmological surveys will map the universe in great detail and explore a wide range of cosmological questions,” says Eisenstein, a co-author on the new MNRAS papers. “But leveraging this opportunity requires a new generation of ambitious numerical simulations. We believe that AbacusSummit will be a bold step for the synergy between computation and experiment.”

The decade-long project was daunting. N-body calculations, which attempt to compute the movements of objects such as planets interacting gravitationally, have been a foremost challenge in physics since the days of Isaac Newton. The trickiness comes from each object interacting with every other object, no matter how far away. With N objects there are N(N − 1)/2 distinct pairs, so the number of interactions grows roughly with the square of the number of objects.
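To make that growth concrete, here is a minimal Python sketch that counts the distinct pairs among a given number of bodies. The function name `pair_count` is purely illustrative and does not come from the Abacus code.

```python
def pair_count(n: int) -> int:
    """Number of distinct pairs among n mutually interacting bodies."""
    return n * (n - 1) // 2


for n in (10, 100, 1000, 1_000_000):
    print(f"{n:>9} bodies -> {pair_count(n):>15} pairs")
# 10 bodies give 45 pairs, 100 give 4950, 1000 give 499500,
# and a million bodies give 499999500000 pairs.
```

Multiplying the number of bodies by ten multiplies the number of pairs by roughly a hundred, which is why a brute-force pairwise sum quickly becomes impractical.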

There is no general closed-form solution to the N-body problem for three or more massive bodies, so practical calculations rely on numerical approximation. A common approach is to freeze time, calculate the total force acting on each object, then nudge each one based on the net force it experiences. Time is then moved forward slightly, and the process repeats.
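The freeze, sum, nudge and advance loop can be sketched in a few lines of Python. This is a toy illustration, not the Abacus method: it works in two dimensions, sets the gravitational constant to 1, adds a small softening length to avoid division by zero, and uses a simple kick-then-drift update. All of those choices, and every name below, are assumptions made for the example.

```python
import math

G = 1.0            # gravitational constant in arbitrary units (assumption)
SOFTENING = 1e-3   # small length that avoids division by zero (assumption)


def accelerations(positions, masses):
    """Sum the pull of every other body on each body (direct summation)."""
    n = len(positions)
    acc = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            dx = positions[j][0] - positions[i][0]
            dy = positions[j][1] - positions[i][1]
            r2 = dx * dx + dy * dy + SOFTENING ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            acc[i][0] += G * masses[j] * dx * inv_r3
            acc[i][1] += G * masses[j] * dy * inv_r3
    return acc


def step(positions, velocities, masses, dt):
    """Freeze time, compute net accelerations, nudge each body, advance by dt."""
    acc = accelerations(positions, masses)
    for i in range(len(positions)):
        velocities[i][0] += acc[i][0] * dt      # kick: update velocity
        velocities[i][1] += acc[i][1] * dt
        positions[i][0] += velocities[i][0] * dt  # drift: update position
        positions[i][1] += velocities[i][1] * dt


# Two equal masses starting at rest should fall toward each other.
positions = [[-1.0, 0.0], [1.0, 0.0]]
velocities = [[0.0, 0.0], [0.0, 0.0]]
masses = [1.0, 1.0]
for _ in range(100):
    step(positions, velocities, masses, dt=0.01)
print(positions)
```

Smaller values of `dt` track the true motion more closely at the cost of more steps, which is the basic trade-off any time-stepping scheme of this kind has to manage.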

Using that approach, AbacusSummit handled colossal numbers of particles thanks to clever code, a new numerical method and lots of computing power. The Summit supercomputer was the world’s fastest at the time the team ran the calculations, and at the time of the announcement it remained the fastest computer in the U.S.

The team designed the codebase for AbacusSummit, called Abacus, to take full advantage of Summit’s parallel processing power, whereby multiple calculations can run simultaneously. In particular, Summit boasts lots of graphics processing units, or GPUs, that excel at parallel processing.

Running N-body calculations using parallel processing requires careful algorithm design because an entire simulation requires a substantial amount of memory to store. That means Abacus can’t just make copies of the simulation for different nodes of the supercomputer to work on. The code instead divides each simulation into a grid. An initial calculation provides a fair approximation of the effects of distant particles at any given point in the simulation (which play a much smaller role than nearby particles). Abacus then groups nearby cells and splits them off so that the computer can work on each group independently, combining the approximation of distant particles with precise calculations of nearby particles.
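The idea of pairing an approximate treatment of distant particles with exact sums over nearby ones can be illustrated with a deliberately simplified Python sketch. It is not the Abacus algorithm: here each distant grid cell is replaced by its total mass placed at its center of mass, the box is two-dimensional and non-periodic, and the grid size, softening length and all function names are assumptions chosen for the example.

```python
import math
import random
from collections import defaultdict

CELLS = 8     # grid cells per side of a unit box (assumption)
EPS = 1e-3    # softening length (assumption)


def cell_of(x, y):
    """Index of the grid cell that contains the point (x, y)."""
    return min(int(x * CELLS), CELLS - 1), min(int(y * CELLS), CELLS - 1)


def pull(px, py, qx, qy, m):
    """Acceleration at (px, py) due to mass m at (qx, qy), with G = 1."""
    dx, dy = qx - px, qy - py
    r2 = dx * dx + dy * dy + EPS * EPS
    f = m / (r2 * math.sqrt(r2))
    return f * dx, f * dy


def build_grid(particles):
    """Group particles (x, y, m) by the grid cell that contains them."""
    grid = defaultdict(list)
    for p in particles:
        grid[cell_of(p[0], p[1])].append(p)
    return grid


def summarize(cell):
    """Replace a cell's particles by their total mass at the center of mass."""
    total = sum(m for _, _, m in cell)
    cx = sum(x * m for x, _, m in cell) / total
    cy = sum(y * m for _, y, m in cell) / total
    return cx, cy, total


def split_acceleration(target, grid):
    """Exact sum over neighboring cells plus one summary term per distant cell."""
    x, y, _ = target
    ci, cj = cell_of(x, y)
    ax = ay = 0.0
    for (i, j), cell in grid.items():
        if abs(i - ci) <= 1 and abs(j - cj) <= 1:
            sources = [p for p in cell if p is not target]  # near field: exact
        else:
            sources = [summarize(cell)]                     # far field: approximate
        for qx, qy, m in sources:
            dax, day = pull(x, y, qx, qy, m)
            ax += dax
            ay += day
    return ax, ay


def direct_acceleration(target, particles):
    """Reference answer: exact sum over every other particle."""
    x, y, _ = target
    ax = ay = 0.0
    for p in particles:
        if p is not target:
            dax, day = pull(x, y, p[0], p[1], p[2])
            ax += dax
            ay += day
    return ax, ay


random.seed(0)
particles = [(random.random(), random.random(), 1.0) for _ in range(500)]
grid = build_grid(particles)
print("near/far split:", split_acceleration(particles[0], grid))
print("direct sum:    ", direct_acceleration(particles[0], particles))
```

The two printed results differ by an amount that depends on the grid size and on how the particles are clustered. Because each group of neighboring cells needs only its own particles plus the far-field summaries, groups can in principle be handed to different processors without copying the whole simulation.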

“The Abacus algorithm is well matched to the capabilities of modern supercomputers, as it provides a very regular pattern of computation for the massive parallelism of GPU co-processors,” Maksimova says.

Thanks to its design, Abacus achieved very high speeds, updating 70 million particles per second per node of the Summit supercomputer, while also performing analysis of the simulations as they ran. Each particle represents a clump of dark matter with 3 billion times the mass of the sun.

“Our vision was to create this code to deliver the simulations that are needed for this particular new brand of galaxy survey,” says Garrison. “We wrote the code to do the simulations much faster and much more accurate than ever before.”

Eisenstein, who is a member of the DESI collaboration — which recently began its survey to map an unprecedented fraction of the universe — says he is eager to use Abacus in the future.

“Cosmology is leaping forward because of the multidisciplinary fusion of spectacular observations and state-of-the-art computing,” he says. “The coming decade promises to be a marvelous age in our study of the historical sweep of the universe.”

Reference: “AbacusSummit: a massive set of high-accuracy, high-resolution N-body simulations” by Nina A Maksimova, Lehman H Garrison, Daniel J Eisenstein, Boryana Hadzhiyska, Sownak Bose and Thomas P Satterthwaite, 7 September 2021, Monthly Notices of the Royal Astronomical Society.

DOI: 10.1093/mnras/stab2484

Additional co-creators of Abacus and AbacusSummit include Sihan Yuan of Stanford University, Philip Pinto of the University of Arizona, Sownak Bose of Durham University in England and Center for Astrophysics researchers Boryana Hadzhiyska, Thomas Satterthwaite and Douglas Ferrer. The simulations ran on the Summit supercomputer under an Advanced Scientific Computing Research Leadership Computing Challenge allocation.

The ray trail of the Bose Effect To Include Cascades

its an optical illusion again im afraid

dazzled by the numbers and the light are u

take a simulation any simulation 1,200,000,000 light years distance

divide it into 5 x 5 squares

each square section 240,000,000 x 240,000,000 light years wide

the large scale structure of the universe the dark energy and matter 95% of the universe the giants of the universe

take any dark shadow thats the large scale structure of the universe the region of space thats dark and in the shade its cold and freezing and nothing moves in this marshy mire its the void like ocean waves of the blue ball planet earth

the cosmic web the planetary planes and the cosmic nodes the planets themselves

tiny filaments of fine spun matter 5% of the universe

and how the dark matter and energy have expanded and has allowed the material world matter 5% to fall into place into a pattern on the sky but really a 3 dimensional shape in the universe

the lilliputians of the universe

each filament is millions of kilometres wide

the dwarfs of the universe 250,000 light years wide galaxies the continents of blue ball planet earth and starlight the islands around the continents of the blue ball planet earth and cosmic dust and cosmic clouds

so to recap a universe 13,700,000,000 light years wide the big picture pic

a simulation 1,200,000,000 light years across the foreground

dark matter bubbles 240,000,000 light years across the shapes

cosmic filaments 50,000,000 light years across the outline the small pic

galaxies 250,000 light years across the bright specks

stars .01 light year across not shown

earth .0001 invisible

now

take any observation bar a simulation

take one observation the reality out there

does it match this picture

do we have gaping holes of solid cheese at 240,000,000 light years across

are they smaller

are they larger

are they there at all

It’s a simulation of the universe, quite obviously with no ‘illusion’ involved.

If you think otherwise, publish your research in peer-reviewed journals.

as u can c i dont do peer review

just freedom of expression

The experimental study of the expansion of the universe by dark energy (accumulating as matter), carried out on computers applying the N-body method, is approximate and useful.

This study rests on the firm assumption that Dark Matter consists of particles affected by gravitation. A view of String Theory suggests a different approach.

Perhaps Dark Matter appears to us as an effect of string/anti-string annihilations. As you may know, quantum mechanics requires that strings must be formed as pairs in the quantum foam – a string and an anti-string – that immediately annihilate each other. Quantum mechanics also requires both the string and anti-string to be surrounded by “jitters” that reduce their monstrous vibrating energies. What if this jitter remains for a fraction of an instant after their string/anti-string annihilations? This temporary jitter would be seen by us as matter, via E=mc², for that instant before it too returns to the foam. That’s why we never see it – the “mass” lasts only for that instant but is repeated over and over and over, all over. Specifics on this can be found in my YouTube video, Dark Matter – A String Theory Way, at https://www.youtube.com/watch?v=N84yISQvGCk

Your PhD in education has failed you: this is the current LCDM cosmology.

String theory is disfavored by experiments, and so are your pseudoscience links.

Thanks for your remarks. As usual, they seem to generate positive interest in my YouTube explanations of how String Theory can answer some of the most difficult questions in astronomy and physics. This may be a kind of good cop/bad cop scenario, with your remarks being such obvious nonsense that curious readers go back to see the power of String Theory. As an active researcher, that’s my goal – to show how String Theory can help to solve these mysteries.

Perhaps gravity isn’t the only thing twisting the matter into shapes in the cosmos. Why is it so hard to believe that electricity on a galactic scale has some part to play?

Because the universe is neutral on large scales, only gravity is dominant, as shown long ago by general relativity (and now confirmed in cosmology).

Worse, “Electric Universe” has concurrently moved from being a fringe suggestion to pseudoscience (look it up).

Explaining what “gravity” is, check out: http://www.darkenergyuniverse.com

Warning, another pseudoscience link.

Gravity doesn’t manipulate dark matter. Dark matter creates gravity. Science doesn’t realize this because it must create stars out of gas and dust which leaves dark matter with nothing to do in our universe. It was a massive collision in space that created the pressure and friction needed to create the energy we see to this day.

And this is Dunning-Kruger at its worst, with a completely irrelevant absurd mass of words.

Oh…

🙂

Torbjörn Larsson,

I shall translate a foreign nonlinear concept for a linear being of unstill thoughtforms.

Every word is of relevance in the eye of the beholder, or else there would be no reaction necessary to declare this case.

I shall not externalize further advancements on this discernment as it is on me to declare my, and only my words to be of relevance. To each their own. Past this I only respond to relevance.

“Gravity doesn’t manipulate dark matter.”

In any paradigm this is accurate due to several rational discernments.

1) Gravity is not understood

2) Dark matter is not observed

3) Within the “Equal and opposite reaction”, a resulting force is reaction. Thus, gravity as a force must be caused in reaction of an action to catalyze it.

“Science doesn’t realize this”

The intention of information in the statement is not just in the words. There are interpretations to analyze in the true intent without projecting the self to distort the words as irrelevant. What clarifies the relevance is request for clarification or open discussion. Declaration will only segregate reception of this correction. This communication appears to fall short often on the nonconstructive reactional behavior that fails to serve the messenger or the recipient and so only serves an artificial reality.

“it must create stars out of gas and dust which leaves dark matter with nothing to do in our universe”

Padding is not to be considered as a function of use, there would need to be a preexistence of a catalyst that initiates the observable structures, not as a result of observable structures coming into existence.

“a massive collision in space that created the pressure and friction needed to create the energy we see to this day”

Accurate, but not enough fidelity on the surface in the reasoning. I should not concern myself with this explanation as I feel it is above the receptive observation limit. An incompatible paradigm. I can only entice that I understand fully the working mechanics behind this statement and so it has great relevance.

I am here to excite, inspire, connect, and enable others when I feel that I have a position of capable services. I only work in positivity and acceptance. Belief and trust are irrelevant to me.

I hope I have comforted some here.