With a $20 million U.S. Department of Energy grant, Stanford researchers plan to use some of the world’s fastest supercomputers to model the complexities of hypersonic flight, focusing on how fuel and air flow through a scramjet engine.

Aeronautical engineers believe hypersonic planes flying at seven to 15 times the speed of sound will someday change the face of air and space travel. That is, if they can master such flight’s known unknowns.

Hypersonic flight is an especially demanding engineering challenge, both because of the mechanical forces on the airframe and because of the physics of the sophisticated engines, which must operate in the extreme conditions of the upper atmosphere where these planes would fly.

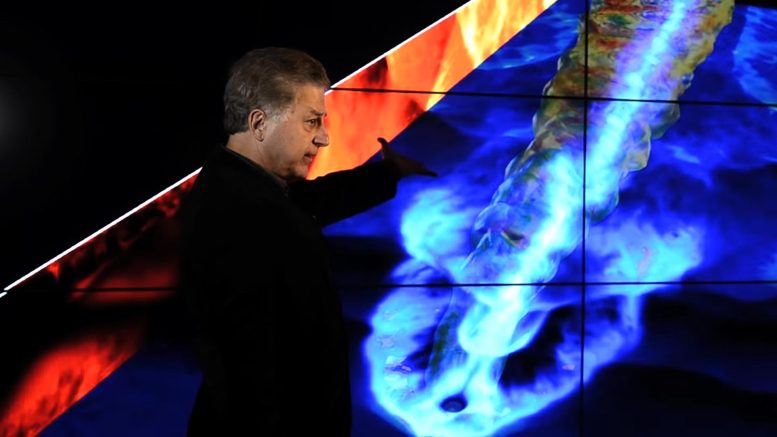

Professor Parviz Moin shows the detail in a simulation of temperature fluctuations from a jet engine’s exhaust. He says it’s one of the largest engineering calculations ever undertaken.

Real-world laboratories can only go so far in reproducing such conditions, and actual flight tests are extraordinarily risky for the vehicles involved. Of the U.S. government’s three most recent tests, two ended in vehicle failure.

But now, thanks to a five-year, $20 million U.S. Department of Energy grant, an interdepartmental research effort is under way at Stanford to tackle these challenges virtually, using some of the world’s fastest supercomputers.

The Stanford Predictive Science Academic Alliance Program (PSAAP) is using computers to model the physical complexities of the hypersonic environment, specifically how fuel and air flow through the engine of a hypersonic aircraft, known as a scramjet (supersonic combustion ramjet).

PSAAP is a collaboration of the departments of mechanical engineering, aeronautics and astronautics, computer science and mathematics, plus Stanford’s Institute for Computational and Mathematical Engineering.

In particular, the program focuses on what is known as the scramjet’s ‘unstart’ problem, said Parviz Moin, the Franklin P. and Caroline M. Johnson Professor in the School of Engineering. He is the founding director of Stanford’s Center for Turbulence Research and faculty director of PSAAP.

“If you put too much fuel in the engine when you try to start it, you get a phenomenon called ‘thermal choking,’ where shock waves propagate back through the engine,” he explained. “Essentially, the engine doesn’t get enough oxygen and it dies. It’s like trying to light a match in a hurricane.”
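In the simplest textbook idealization, thermal choking shows up in Rayleigh flow: heat added to a supersonic stream in a constant-area, frictionless duct slows it toward Mach 1, and once the stagnation temperature reaches a critical value T0* no more heat can be added without forcing the flow to readjust, for example through shocks that travel upstream. The Python sketch that follows is only that idealization, not PSAAP's model; it assumes a calorically perfect gas with γ = 1.4 and uses illustrative combustor-entry Mach numbers chosen for this example. It computes how far combustion can raise the stagnation temperature before the duct chokes.

```python
GAMMA = 1.4  # ratio of specific heats; assumes a calorically perfect gas (air)


def rayleigh_t0_ratio(mach: float, gamma: float = GAMMA) -> float:
    """Rayleigh-flow ratio T0 / T0*, where T0* is the stagnation temperature
    at which heat addition drives the flow to Mach 1 (thermal choking).

    T0/T0* = (gamma + 1) M^2 [2 + (gamma - 1) M^2] / (1 + gamma M^2)^2
    """
    m2 = mach * mach
    return (gamma + 1.0) * m2 * (2.0 + (gamma - 1.0) * m2) / (1.0 + gamma * m2) ** 2


def max_t0_rise(entry_mach: float, gamma: float = GAMMA) -> float:
    """Largest factor by which heat release can raise the stagnation temperature
    of a flow entering the duct at `entry_mach` before the duct chokes."""
    return 1.0 / rayleigh_t0_ratio(entry_mach, gamma)


if __name__ == "__main__":
    # Illustrative supersonic combustor-entry Mach numbers (assumed values).
    for mach in (1.5, 2.0, 2.5, 3.0):
        print(f"entry Mach {mach:.1f}: T0 can rise by a factor of "
              f"{max_t0_rise(mach):.2f} before choking")
```

In this idealization, burning enough fuel to push the stagnation temperature past the allowed rise is what chokes the duct, and a faster entry flow tolerates more heat release before that happens.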

Modeling the unstart phenomenon requires a clear understanding of the physics and then reproducing mathematically the immensely complex interactions that occur at hypersonic speeds.

It is impossible to model the physical world exactly, said Juan Alonso, an associate professor of aeronautics and astronautics.

“When you base decisions on computations that are in some way imperfect, you make errors,” he said. “Not only that, but these hypersonic vehicles are themselves subject to uncertainties in how they behave in the air.”

As a result, PSAAP’s principal goal is to try to quantify those uncertainties – the known unknowns – so that scramjet engineers can build the appropriate tolerances into their designs to allow the engines to function in extraordinary environments.

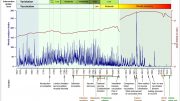

Measuring what engineers call epistemic uncertainty is common in real-world experimental work, where researchers typically report the uncertainty in their measurements as error bars with upper and lower limits. But the hard numbers generated by computer models come without such bars and can therefore take on an unwarranted, potentially dangerous air of certainty.

“We have asked experimentalists for a long time to give us uncertainty bars on their measurements,” said Moin. “But I think the time is right to ask the computationalists to do the same.”

Quantification of uncertainties in numerical predictions is at the core of PSAAP. Gianluca Iaccarino, assistant professor of mechanical engineering, is leading this effort.
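One common way to attach such a bar to a computed number is Monte Carlo propagation: sample the uncertain inputs, run the model on each sample, and report the spread of the results. The Python sketch that follows is a minimal illustration under stated assumptions, not PSAAP's method. The "model" is the textbook isentropic relation T0/T = 1 + (γ − 1)/2 · M² for the ratio of stagnation to ambient temperature, and the only uncertain input is a flight Mach number assumed, for this example, to lie anywhere between 6.5 and 7.5.

```python
import random
import statistics

GAMMA = 1.4  # ratio of specific heats for air (assumed calorically perfect)


def stagnation_temperature_ratio(mach: float, gamma: float = GAMMA) -> float:
    """Isentropic relation T0/T = 1 + (gamma - 1)/2 * M^2."""
    return 1.0 + 0.5 * (gamma - 1.0) * mach * mach


def propagate(mach_low: float, mach_high: float,
              n_samples: int = 10_000, seed: int = 0):
    """Monte Carlo propagation: sample the uncertain input uniformly, run the
    model on each sample, and summarize the outputs as an uncertainty bar."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    outputs = sorted(
        stagnation_temperature_ratio(rng.uniform(mach_low, mach_high))
        for _ in range(n_samples)
    )
    mean = statistics.fmean(outputs)
    # Central 95% interval: drop the lowest and highest 2.5% of the samples.
    lower = outputs[int(0.025 * n_samples)]
    upper = outputs[int(0.975 * n_samples) - 1]
    return mean, lower, upper


if __name__ == "__main__":
    mean, lower, upper = propagate(6.5, 7.5)
    print(f"T0/T = {mean:.2f} (95% interval {lower:.2f} to {upper:.2f})")
```

Reporting a percentile interval rather than a mean plus or minus a standard deviation avoids assuming that the model output is normally distributed.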

Innovations in computing

One reason computational uncertainty quantification is a relatively new science is that, until recently, the necessary computer resources simply didn’t exist.

“Some of our latest calculations run on 163,000 processors simultaneously,” Moin said. “I think they’re some of the largest calculations ever undertaken.”

Thanks to its close relationship with the Department of Energy, the Stanford PSAAP team has access to the massive computer facilities at the Lawrence Livermore, Los Alamos and Sandia national laboratories, where its largest and most complex simulations can be run.

It takes specialized knowledge to get computers of this scale to perform effectively, however.

“And that’s not something scientists and engineers should be worrying about,” said Alonso. That, he said, is why the collaboration between departments is critical.

“Mechanical engineers and those of us in aeronautics and astronautics understand the flow and combustion physics of scramjet engines and the predictive tools. We need the computer scientists to help us figure out how to run these tests on these large computers,” he said.

That need will only increase over the next decade as supercomputers move toward the exascale – computers with a million or more processors able to execute a quintillion calculations in a single second.
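As a rough sense of scale, assuming for simplicity that the work divides evenly across processors: a quintillion is 10^18, so at a million processors each one would have to sustain on the order of a trillion calculations per second.

$$\frac{10^{18}\ \text{calculations/s}}{10^{6}\ \text{processors}} = 10^{12}\ \text{calculations/s per processor}$$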

Thinking ahead to that day, a PSAAP team led by computer science Professor Pat Hanrahan has created LISZT, an entirely new computer language for running complex simulations on massive processor sets.

The great virtue of LISZT is that it lets scientists and engineers express their problems directly, in code designed specifically for exascale architectures. That makes it accessible to experts in fluid physics, combustion, turbulence and other mathematically intensive fields while remaining highly computationally efficient.

LISZT appears to be one of the most viable approaches (some say the only one) to real scientific modeling at the exascale, a distinction that is attracting widespread international interest.

“It’s something you could never have created unless you put computer scientists, mathematicians, mechanical engineers and aerospace engineers together in the same room,” said Alonso. “Do it, though, and you can produce some really magical results.”

Advances in several dimensions

Beyond progress in the treatment of epistemic uncertainty in computer modeling and the creation of LISZT, the Stanford PSAAP team has made key advances in the specific disciplines that underpin scramjet engineering: combustion, turbulence and fluid flow in general.

And while running an actual scramjet engine in a wind tunnel at hypersonic speeds remains daunting, the Stanford PSAAP project is unique in that it has also supported advances in physical experimentation.

“We’ve had strong validation of our computational work against experiments,” said Moin. “In particular, in the High Temperature Gasdynamics Lab and the Flow Physics group, we’ve developed a number of new techniques to validate how we build physical models into our computer code.”

Collectively, these insights will enable the design of safer, more reliable hypersonic engines. But PSAAP’s advances in quantifying uncertainty have other, far broader implications, said Alonso.

“These same technologies can be used to quantify flow of air around wind farms, for example, or for complex global climate models,” he said. “I was in Los Alamos talking with people who are interested in global climate, and guess what? Just like the models for the scramjet, right now their climate models are far from perfect, but it doesn’t stop them from pushing ahead.”
