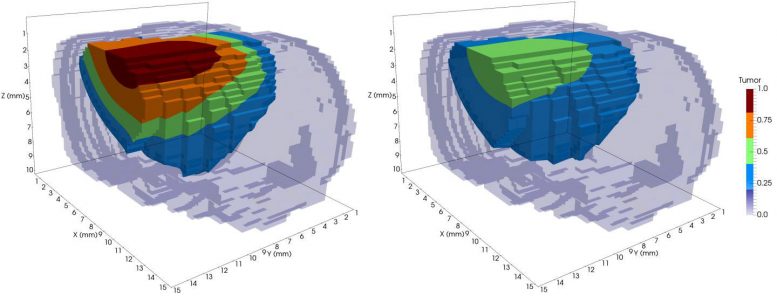

This is a model of tumor growth in a rat brain before radiation treatment (left) and after one session of radiotherapy (right). The different colors represent tumor cell concentration, with red being the highest. The treatment reduced the tumor mass substantially. Credit: Lima et al. 2017, Hormuth et al. 2015

Attempts to eradicate cancer are often compared to a “moonshot” — the successful effort that sent the first astronauts to the moon.

But imagine if, instead of Newton’s second law of motion, which describes the relationship between an object’s mass and the amount of force needed to accelerate it, we only had reams of data related to throwing various objects into the air.

This, says Thomas Yankeelov, approximates the current state of cancer research: data-rich, but lacking governing laws and models.

The solution, he believes, is not to mine large quantities of patient data, as some insist, but to mathematize cancer: to uncover the fundamental formulas that represent how cancer, in its many varied forms, behaves.

“We’re trying to build models that describe how tumors grow and respond to therapy,” said Yankeelov, director of the Center for Computational Oncology at The University of Texas at Austin (UT Austin) and director of Cancer Imaging Research in the LIVESTRONG Cancer Institutes of the Dell Medical School. “The models have parameters in them that are agnostic, and we try to make them very specific by populating them with measurements from individual patients.”
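As a rough illustration of what "populating" a generic model with one patient's measurements can mean, the sketch below calibrates a textbook logistic growth curve to a short series of tumor-size measurements. It is not the group's model: the logistic form, the grid search, the function names, and the synthetic measurements are all assumptions made for the example.

```python
import math

def logistic(t, n0, r, k):
    """Tumor size at time t under logistic growth from initial size n0."""
    return k / (1.0 + (k - n0) / n0 * math.exp(-r * t))

def calibrate(times, sizes, r_grid, k_grid):
    """Return the (r, k) pair on the grid that minimizes squared error."""
    n0 = sizes[0]  # take the first measurement as the initial size
    best = None
    for r in r_grid:
        for k in k_grid:
            err = sum((logistic(t, n0, r, k) - s) ** 2
                      for t, s in zip(times, sizes))
            if best is None or err < best[0]:
                best = (err, r, k)
    return best[1], best[2]

# Synthetic "patient" measurements (made-up values, generated with
# growth rate r = 0.5 and carrying capacity k = 20).
times = [0, 2, 4, 6, 8, 10]
sizes = [logistic(t, 1.0, 0.5, 20.0) for t in times]

r_grid = [i / 100 for i in range(10, 101, 5)]   # 0.10, 0.15, ..., 1.00
k_grid = [float(k) for k in range(10, 41, 2)]   # 10, 12, ..., 40
r_fit, k_fit = calibrate(times, sizes, r_grid, k_grid)
print(r_fit, k_fit)
```

The generic curve knows nothing about any individual until `r` and `k` are fitted; once they are, the same equation yields a forecast specific to that data set.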

The Center for Computational Oncology (part of the broader Institute for Computational Engineering and Sciences, or ICES) is developing complex computer models and analytic tools to predict how cancer will progress in a specific individual, based on their unique biological characteristics.

In December 2017, writing in Computer Methods in Applied Mechanics and Engineering, Yankeelov and collaborators at UT Austin and the Technical University of Munich showed that they can predict how brain tumors (gliomas) will grow and respond to X-ray radiation therapy with much greater accuracy than previous models. They did so by including factors like the mechanical forces acting on the cells and the tumor’s cellular heterogeneity. The paper continues research first described in the Journal of The Royal Society Interface in April 2017.

“We’re at the phase now where we’re trying to recapitulate experimental data so we have confidence that our model is capturing the key factors,” he said.

To develop and implement their mathematically complex models, the group uses the advanced computing resources at the Texas Advanced Computing Center (TACC). TACC’s supercomputers enable researchers to solve bigger problems than they otherwise could and reach solutions far faster than with a single computer or campus cluster.

According to ICES Director J. Tinsley Oden, mathematical models of the invasion and growth of tumors in living tissue have been “smoldering in the literature for a decade,” and in the last few years, significant advances have been made.

“We’re making genuine progress to predict the growth and decline of cancer and reactions to various therapies,” said Oden, a member of the National Academy of Engineering.

MODEL SELECTION AND TESTING

Over the years, many different mathematical models of tumor growth have been proposed, but determining which is most accurate at predicting cancer progression is a challenge.

In October 2016, writing in Mathematical Models and Methods in Applied Sciences, the team used a study of cancer in rats to test 13 leading tumor growth models and determine which could predict key quantities of interest relevant to survival, as well as the effects of various therapies.

They applied the principle of Occam’s razor, which says that where two explanations for an occurrence exist, the simpler one is usually better. They implemented this principle through the development and application of something they call the “Occam Plausibility Algorithm,” which selects the most plausible model for a given dataset and determines if the model is a valid tool for predicting tumor growth and morphology.
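The general idea of preferring the simplest adequate model can be sketched in a few lines. The example below is only a loose illustration and not the published Occam Plausibility Algorithm: the two candidate models, the brute-force calibration, the error measure, and the 5 percent tolerance are all assumptions chosen for the example. Candidates are tried in order of parameter count, and the first one that reproduces the data within tolerance is kept.

```python
import itertools
import math

def exponential(t, n0, params):
    (r,) = params
    return n0 * math.exp(r * t)

def logistic(t, n0, params):
    r, k = params
    return k / (1.0 + (k - n0) / n0 * math.exp(-r * t))

R_GRID = [i / 100 for i in range(10, 101, 5)]   # growth rates to try
K_GRID = [float(k) for k in range(10, 41, 2)]   # carrying capacities to try

# Hypothetical candidates: (name, model function, one grid per parameter).
CANDIDATES = [
    ("logistic", logistic, [R_GRID, K_GRID]),
    ("exponential", exponential, [R_GRID]),
]

def relative_error(model, params, times, sizes):
    """Root-mean-square error, scaled by the largest measurement."""
    n0 = sizes[0]
    sq = sum((model(t, n0, params) - s) ** 2 for t, s in zip(times, sizes))
    return math.sqrt(sq / len(sizes)) / max(sizes)

def calibrate(model, grids, times, sizes):
    """Brute-force search for the best parameter tuple on the grid."""
    return min(itertools.product(*grids),
               key=lambda p: relative_error(model, p, times, sizes))

def select_simplest(candidates, times, sizes, tol=0.05):
    """Return the fewest-parameter model whose calibrated error is <= tol."""
    for name, model, grids in sorted(candidates, key=lambda c: len(c[2])):
        params = calibrate(model, grids, times, sizes)
        if relative_error(model, params, times, sizes) <= tol:
            return name, params
    return None  # no candidate is adequate for this data set

times = [0, 2, 4, 6, 8, 10]
saturating = [logistic(t, 1.0, (0.5, 20.0)) for t in times]   # synthetic data
unchecked = [exponential(t, 1.0, (0.3,)) for t in times]      # synthetic data

chosen_saturating = select_simplest(CANDIDATES, times, saturating)
chosen_unchecked = select_simplest(CANDIDATES, times, unchecked)
print(chosen_saturating, chosen_unchecked)
```

For growth that levels off, the one-parameter exponential cannot match the data, so the two-parameter logistic is selected. For unchecked growth, the simpler exponential already suffices and the search stops there.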

The method predicted the final mass of the rat tumors to within 5 to 10 percent.

“We have examples where we can gather data from lab animals or human subjects and make startlingly accurate depictions about the growth of cancer and the reaction to various therapies, like radiation and chemotherapy,” Oden said.

The team analyzes patient-specific data from magnetic resonance imaging (MRI), positron emission tomography (PET), X-ray computed tomography (CT), biopsies, and other sources to develop their computational model.

Each factor involved in the tumor response — whether it is the speed with which chemotherapeutic drugs reach the tissue or the degree to which cells signal each other to grow — is characterized by a mathematical equation that captures its essence.

“You put mathematical models on a computer and tune them and adapt them and learn more,” Oden said. “It is, in a way, an approach that goes back to Aristotle, but it accesses the most modern levels of computing and computational science.”

The group tries to model biological behavior at the tissue, cellular and cell signaling levels. Some of their models involve 10 species of tumor cells and include elements like cell connective tissue, nutrients and factors related to the development of new blood vessels. They have to solve partial differential equations for each of these elements and then intelligently couple them to all the other equations.
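To give a sense of what coupling such equations involves, here is a deliberately small sketch with just two fields on a one-dimensional strip of tissue: a tumor cell density that diffuses and proliferates only where nutrient is available, and a nutrient concentration that diffuses and is consumed by tumor cells. This is not the group's model. The equations, the explicit finite-difference scheme, and every coefficient are assumptions chosen for illustration.

```python
def laplacian(f, dx):
    """Second difference with zero-flux (mirrored) boundaries."""
    n = len(f)
    out = [0.0] * n
    for i in range(n):
        left = f[i - 1] if i > 0 else f[i + 1]
        right = f[i + 1] if i < n - 1 else f[i - 1]
        out[i] = (left + right - 2.0 * f[i]) / dx ** 2
    return out

def step(u, c, dx, dt, d_u=0.01, d_c=0.1, growth=1.0, uptake=0.5):
    """Advance tumor density u and nutrient c by one explicit Euler step.

    The two equations are coupled: u grows only where c > 0,
    and c is depleted wherever u > 0.
    """
    lap_u = laplacian(u, dx)
    lap_c = laplacian(c, dx)
    new_u, new_c = [], []
    for i in range(len(u)):
        proliferation = growth * c[i] * u[i] * (1.0 - u[i])
        consumption = uptake * c[i] * u[i]
        new_u.append(u[i] + dt * (d_u * lap_u[i] + proliferation))
        new_c.append(c[i] + dt * (d_c * lap_c[i] - consumption))
    return new_u, new_c

# Hypothetical setup: 51 grid points, a small tumor seed in the middle,
# nutrient initially uniform. dt is small enough that the explicit scheme
# is stable (d_c * dt / dx**2 = 0.1, below the 0.5 limit).
n, dx, dt = 51, 0.1, 0.01
center = n // 2
u0 = [0.5 if abs(i - center) <= 2 else 0.0 for i in range(n)]
c0 = [1.0] * n

u, c = u0, c0
for _ in range(200):
    u, c = step(u, c, dx, dt)

print(sum(u0), sum(u), c[center])
```

Even in this toy version neither equation can be advanced on its own, since each update reads the other field. Models with many cell species, in three dimensions, multiply that coupling many times over.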

“This is one of the most complicated projects in computational science. But you can do anything with a supercomputer,” Oden said. “There’s a cascading list of models at different scales that talk to each other. Ultimately, we’re going to need to learn to calibrate each and compute their interactions with each other.”

FROM COMPUTER TO CLINIC

The research team at UT Austin — which comprises 30 faculty, students, and postdocs — doesn’t only develop mathematical and computer models. Some researchers work with cell samples in vitro; some do pre-clinical work in mice and rats. And recently, the group has begun a clinical study to predict, after one treatment, how an individual’s cancer will progress, and use that prediction to plan the future course of treatment.

At Vanderbilt University, Yankeelov’s previous institution, his group was able to predict with 87 percent accuracy whether a breast cancer patient would respond positively to treatment after just one cycle of therapy. They are trying to reproduce those results in a community setting and extend their models by adding new factors that describe how the tumor evolves.

The combination of mathematical modeling and high-performance computing may be the only way to overcome the complexity of cancer, which is not one disease but more than a hundred, each with numerous sub-types.

“There are not enough resources or patients to sort this problem out because there are too many variables. It would take until the end of time,” Yankeelov said. “But if you have a model that can recapitulate how tumors grow and respond to therapy, then it becomes a classic engineering optimization problem. ‘I have this much drug and this much time. What’s the best way to give it to minimize the number of tumor cells for the longest amount of time?’”
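The optimization framing Yankeelov describes can be illustrated with a toy problem. The sketch below assumes a simple made-up tumor model (logistic regrowth with an exponential dose-kill term), a fixed drug budget, and a per-day dose cap, then tries every feasible schedule and keeps the one with the lowest cumulative tumor burden. None of the parameter values or function names come from the research; they are placeholders for the example.

```python
import itertools
import math

def simulate(schedule, n0=1.0, r=0.3, k=10.0, alpha=0.4):
    """Return cumulative tumor burden over the schedule (one entry per day).

    Each day the dose kills a fraction of cells, then the tumor regrows
    logistically. All parameter values are illustrative assumptions.
    """
    n = n0
    burden = 0.0
    for dose in schedule:
        n *= math.exp(-alpha * dose)      # fraction surviving the dose
        n += r * n * (1.0 - n / k)        # one day of logistic regrowth
        burden += n
    return burden

def best_schedule(days, total_units, max_per_day):
    """Exhaustively search schedules that use exactly the drug budget."""
    feasible = (s for s in itertools.product(range(max_per_day + 1), repeat=days)
                if sum(s) == total_units)
    return min(feasible, key=simulate)

# "This much drug and this much time": 4 dose units, 6 days, at most 2 per day.
best = best_schedule(days=6, total_units=4, max_per_day=2)
print(best, simulate(best))
```

Exhaustive search works here only because the toy problem has a few hundred candidate schedules. With a realistic patient-specific model, each evaluation is itself an expensive simulation, which is where high-performance computing comes in.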

Computing at TACC has helped Yankeelov accelerate his research. “We can solve problems in a few minutes that would take us 3 weeks to do using the resources at our old institution,” he said. “It’s phenomenal.”

According to Oden and Yankeelov, very few research groups are trying, as the UT Austin group is, to sync clinical and experimental work with computational modeling and state-of-the-art computing resources.

“There’s a new horizon here, a more challenging future ahead where you go back to basic science and make concrete predictions about health and well-being from first principles,” Oden said.

Said Yankeelov: “The idea of taking each patient as an individual to populate these models to make a specific prediction for them and someday be able to take their model and then try on a computer a whole bunch of therapies on them to optimize their individual therapy — that’s the ultimate goal and I don’t know how you can do that without mathematizing the problem.”

Reference: “Selection and validation of predictive models of radiation effects on tumor growth based on noninvasive imaging data” by E.A.B.F. Lima, J.T. Oden, B. Wohlmuth, A. Shahmoradi, D.A. Hormuth II, T.E. Yankeelov, L. Scarabosio and T. Horger, 23 November 2017, Computer Methods in Applied Mechanics and Engineering.

DOI: 10.1016/j.cma.2017.08.009
