Scientists have developed a method to give objects like smartphones a bat-like sense of their surroundings, using a sophisticated machine-learning algorithm based on echolocation. Credit: University of Glasgow

Scientists have found a way to equip everyday objects like smartphones and laptops with a bat-like sense of their surroundings.

At the heart of the technique is a sophisticated machine-learning algorithm that uses reflected echoes to generate images, similar to the way bats navigate and hunt using echolocation.

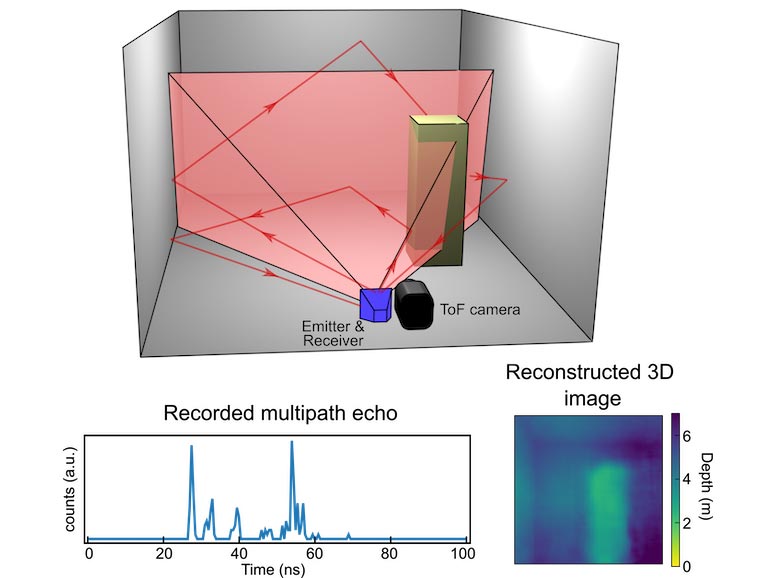

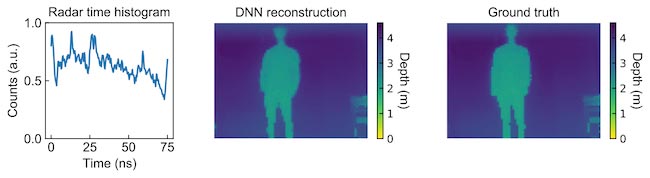

The algorithm measures the time it takes for blips of sound emitted by speakers, or radio waves pulsed from small antennas, to bounce around inside an indoor space and return to the sensor.
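
The timing principle can be illustrated with a short sketch. An echo travels to a reflector and back, so distance is speed times delay divided by two, and a single sensor sees only a list of arrival times, which can be binned into a temporal histogram. The speeds are standard physical values; the bin width, the reflector distances, and the helper names `round_trip_distance` and `echo_histogram` are hypothetical choices for illustration, not taken from the paper.

```python
# Sketch: how echo delays at a single sensor map to distances and a
# temporal histogram. Speeds are textbook values; the bin width and the
# reflector distances used below are illustrative assumptions.
SPEED_OF_SOUND = 343.0          # m/s, air at roughly 20 °C
SPEED_OF_LIGHT = 299_792_458.0  # m/s, radio waves (approximately, in air)


def round_trip_distance(delay_s: float, speed: float) -> float:
    """Distance to a reflector, given the out-and-back echo delay."""
    return speed * delay_s / 2.0


def echo_histogram(distances_m, speed, bin_width_s, n_bins):
    """Count echo arrivals per time bin, as one sensor would record them."""
    hist = [0] * n_bins
    for d in distances_m:
        delay = 2.0 * d / speed            # time to reach the reflector and return
        idx = int(delay // bin_width_s)    # which time bin the echo lands in
        if 0 <= idx < n_bins:              # ignore echoes outside the window
            hist[idx] += 1
    return hist


# Hypothetical room: a person 1 m away, walls at 2 m and 3 m.
hist = echo_histogram([1.0, 2.0, 3.0], SPEED_OF_SOUND, bin_width_s=0.001, n_bins=20)
print([i for i, count in enumerate(hist) if count])  # → [5, 11, 17]
```

The histogram records only when echoes arrive, not the direction they came from; turning such a recording into an image is the part the trained algorithm has to learn.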

By cleverly analyzing the results, the algorithm can deduce the shape, size, and layout of a room, as well as pick out the presence of objects or people. The results are displayed as a video feed that turns the echo data into three-dimensional vision.

One key difference between the team’s achievement and the echolocation of bats is that bats have two ears to help them navigate, while the algorithm is tuned to work with data collected from a single point, like a microphone or a radio antenna.

The researchers say that the technique could be used to generate images with potentially any device equipped with a microphone and speakers, or with a radio antenna.

The research, outlined in a paper published on 30 April 2021 in the journal Physical Review Letters by computing scientists and physicists from the University of Glasgow, could have applications in security and healthcare.

Dr. Alex Turpin and Dr. Valentin Kapitany, of the University of Glasgow’s School of Computing Science and School of Physics and Astronomy, are the lead authors of the paper.

Dr. Turpin said: “Echolocation in animals is a remarkable ability, and science has managed to recreate the ability to generate three-dimensional images from reflected echoes in a number of different ways, like RADAR and LiDAR.

“What sets this research apart from other systems is that, firstly, it requires data from just a single input – the microphone or the antenna – to create three-dimensional images. Secondly, we believe that the algorithm we’ve developed could turn any device with either of those pieces of kit into an echolocation device.

“That means that the cost of this kind of 3D imaging could be greatly reduced, opening up many new applications. A building could be kept secure without traditional cameras by picking up the signals reflected from an intruder, for example. The same could be done to keep track of the movements of vulnerable patients in nursing homes. We could even see the system being used to track the rise and fall of a patient’s chest in healthcare settings, alerting staff to changes in their breathing.”

The paper outlines how the researchers used the speakers and microphone from a laptop to generate and receive acoustic waves in the kilohertz range. They also used an antenna to do the same with radio-frequency waves in the gigahertz range.
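
For a sense of scale, a wave's wavelength is its propagation speed divided by its frequency. The sketch below assumes illustrative frequencies of 10 kHz for sound and 5 GHz for radio; the article gives only the kilohertz and gigahertz ranges, not the exact values used, and `wavelength_m` is a hypothetical helper.

```python
# Wavelength = propagation speed / frequency.
# The two frequencies below are illustrative assumptions, not the paper's values.
SPEED_OF_SOUND = 343.0          # m/s, air at roughly 20 °C
SPEED_OF_LIGHT = 299_792_458.0  # m/s, radio waves (approximately, in air)


def wavelength_m(speed_m_s: float, frequency_hz: float) -> float:
    """Return the wavelength in metres for a wave of the given speed and frequency."""
    return speed_m_s / frequency_hz


acoustic = wavelength_m(SPEED_OF_SOUND, 10e3)  # assumed 10 kHz tone
radio = wavelength_m(SPEED_OF_LIGHT, 5e9)      # assumed 5 GHz signal
print(f"acoustic: {acoustic * 100:.1f} cm, radio: {radio * 100:.1f} cm")
# → acoustic: 3.4 cm, radio: 6.0 cm
```

Although these frequencies differ by several orders of magnitude, both wavelengths come out at a few centimetres, far smaller than a room.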

In each case, they recorded the reflected waves in a room while a single person moved around. At the same time, they also recorded the room using a special camera that relies on a process known as time-of-flight to measure the dimensions of the room and provide a low-resolution image.

By combining the echo data from the microphone or antenna with the image data from the time-of-flight camera, the team ‘trained’ their machine-learning algorithm over hundreds of repetitions to associate specific delays in the echoes with images. Eventually, the algorithm had learned enough to generate its own highly accurate images of the room and its contents from the echo data alone, giving it the ‘bat-like’ ability to sense its surroundings.
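
The idea of learning from paired examples can be sketched in a few lines. To keep it short, this sketch swaps in a 1-nearest-neighbour lookup instead of the researchers' machine-learning model: "training" stores pairs of (echo histogram, camera image), and prediction returns the image whose stored histogram is closest to a new recording. The functions `train` and `predict`, the five-bin histograms, and the 1x3 "images" are all hypothetical toy data.

```python
import math

# Stand-in for the learned mapping: 1-nearest-neighbour lookup, NOT the
# researchers' model. It only illustrates learning from paired
# (echo histogram, camera image) examples.


def train(pairs):
    """'Training' here simply stores the paired examples."""
    return [(tuple(hist), image) for hist, image in pairs]


def predict(model, hist):
    """Return the stored image whose echo histogram is closest to `hist`."""
    best_image, best_dist = None, math.inf
    for stored_hist, image in model:
        dist = math.dist(stored_hist, hist)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_image, best_dist = image, dist
    return best_image


# Hypothetical toy data: the person's echo moves to later bins as they walk
# away; bin 4 holds a fixed wall echo. Images are 1x3 occupancy rows.
training_pairs = [
    ((3, 0, 0, 0, 1), (1, 0, 0)),  # person near the sensor
    ((0, 3, 0, 0, 1), (0, 1, 0)),  # person mid-room
    ((0, 0, 3, 0, 1), (0, 0, 1)),  # person far from the sensor
]
model = train(training_pairs)
print(predict(model, (0, 2, 1, 0, 1)))  # → (0, 1, 0)
```

A lookup like this can only return images it has already stored, whereas a trained model such as a neural network can generalize to recordings that fall between its training examples.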

The research builds on previous work by the team, which trained a neural-network algorithm to build three-dimensional images by measuring the reflections from flashes of light using a single-pixel detector.

Dr. Turpin added: “We’ve now been able to demonstrate the effectiveness of this algorithmic machine-learning technique using light and sound, which is very exciting. It’s clear that there is a lot of potential here for sensing the world in new ways, and we’re keen to continue exploring the possibilities of generating more high-resolution images in the future.”

Reference: “3D Imaging from Multipath Temporal Echoes” by Alex Turpin, Valentin Kapitany, Jack Radford, Davide Rovelli, Kevin Mitchell, Ashley Lyons, Ilya Starshynov and Daniele Faccio, 30 April 2021, Physical Review Letters.

DOI: 10.1103/PhysRevLett.126.174301

The team’s paper is published in Physical Review Letters. The research was supported by funding from the Royal Academy of Engineering and the Engineering and Physical Sciences Research Council (EPSRC), part of UK Research and Innovation (UKRI).
