A recent study found that 97% of the answers the AI chatbot ChatGPT gave about common cancer myths and misconceptions were accurate. However, its language was indirect and potentially confusing, and the researchers urge caution when advising patients to use chatbots for cancer information.

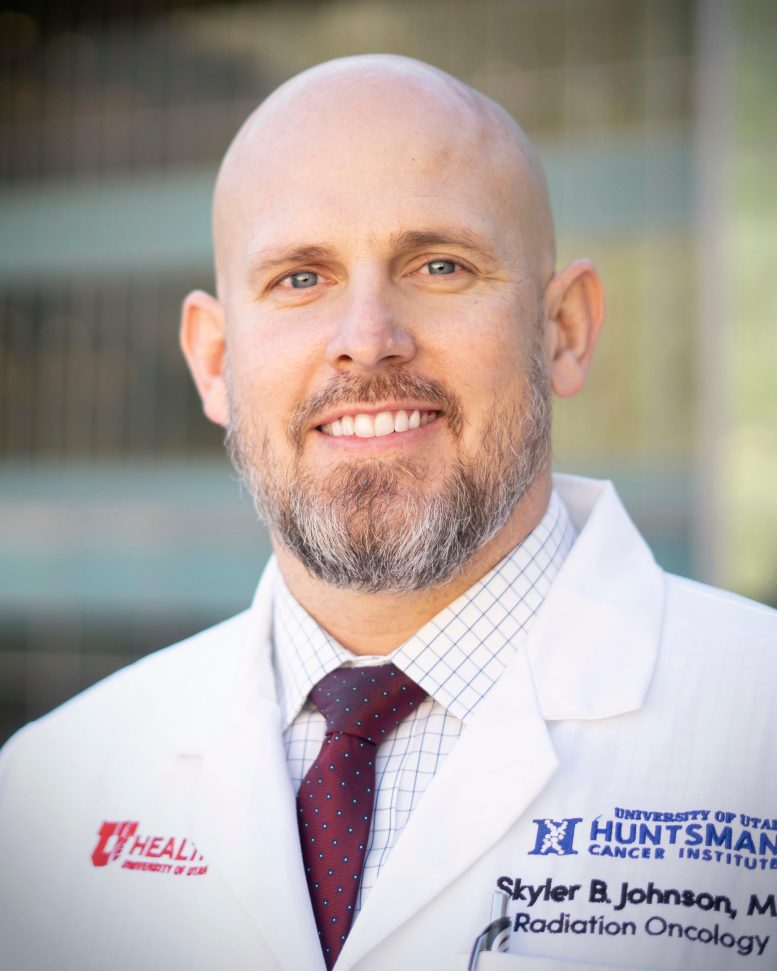

A study published in the Journal of the National Cancer Institute Cancer Spectrum examined the increasing use of chatbots and artificial intelligence (AI) to provide cancer-related information. The researchers found that ChatGPT accurately debunked common myths and misconceptions about cancer. The research was led by Skyler Johnson, MD, a physician-scientist at the Huntsman Cancer Institute and an assistant professor in the department of radiation oncology at the University of Utah. His aim was to assess the dependability and accuracy of cancer information provided by ChatGPT.

Skyler Johnson, MD. Credit: Huntsman Cancer Institute

Johnson and his team used the National Cancer Institute’s (NCI) list of frequent cancer myths and misconceptions as a testing ground. They found that 97% of the answers provided by ChatGPT were accurate. This result comes with caveats, however. One concern raised by the team was that some of ChatGPT’s answers could be misunderstood or misinterpreted.

“This could lead to some bad decisions by cancer patients,” says Johnson. The team suggested caution when advising patients about whether they should use chatbots for information about cancer.

In the study, reviewers were blinded, meaning they didn’t know whether the answers came from the chatbot or the NCI. Though the answers were accurate, reviewers found that ChatGPT’s language was indirect, vague, and in some cases unclear.

“I recognize and understand how difficult it can feel for cancer patients and caregivers to access accurate information,” says Johnson. “These sources need to be studied so that we can help cancer patients navigate the murky waters that exist in the online information environment as they try to seek answers about their diagnoses.”

Incorrect information can harm cancer patients. In a previous study published in the Journal of the National Cancer Institute, Johnson and his team found that misinformation was common on social media and had the potential to harm cancer patients.

The next steps are to evaluate how often patients are using chatbots to seek out information about cancer, what questions they are asking, and whether AI chatbots provide accurate answers to uncommon or unusual questions about cancer.

Reference: “Using ChatGPT to evaluate cancer myths and misconceptions: artificial intelligence and cancer information” by Skyler B Johnson, Andy J King, Echo L Warner, Sanjay Aneja, Benjamin H Kann and Carma L Bylund, 17 March 2023, Journal of The National Cancer Institute – Cancer Spectrum.

DOI: 10.1093/jncics/pkad015

The study was funded by the National Cancer Institute and Huntsman Cancer Foundation.
