The New Shepard (NS) booster lands after this vehicle’s fifth flight during NS-11 on May 2, 2019. Credit: Blue Origin

Some of the most interesting places to study in our solar system are found in the most inhospitable environments – but landing on any planetary body is already a risky proposition. With NASA planning robotic and crewed missions to new locations on the Moon and Mars, avoiding landing on the steep slope of a crater or in a boulder field is critical to helping ensure a safe touchdown for surface exploration of other worlds. To improve landing safety, NASA is developing and testing a suite of precise landing and hazard-avoidance technologies.

A combination of laser sensors, a camera, a high-speed computer, and sophisticated algorithms will give spacecraft the artificial eyes and analytical capability to find a designated landing area, identify potential hazards, and adjust course to the safest touchdown site. The technologies developed under the Safe and Precise Landing – Integrated Capabilities Evolution (SPLICE) project within the Space Technology Mission Directorate’s Game Changing Development program will eventually make it possible for spacecraft to avoid boulders, craters, and more within landing areas half the size of a football field already targeted as relatively safe.

A new suite of lunar landing technologies, called Safe and Precise Landing – Integrated Capabilities Evolution (SPLICE), will enable safer and more accurate lunar landings than ever before. Future Moon missions could use NASA’s advanced SPLICE algorithms and sensors to target landing sites that weren’t possible during the Apollo missions, such as regions with hazardous boulders and nearby shadowed craters. SPLICE technologies could also help land humans on Mars. Credit: NASA

Three of SPLICE’s four main subsystems will have their first integrated test flight on a Blue Origin New Shepard rocket during an upcoming mission. As the rocket’s booster returns to the ground after reaching the boundary between Earth’s atmosphere and space, SPLICE’s terrain relative navigation, navigation Doppler lidar, and descent and landing computer will run onboard the booster. Each will operate in the same way it will when approaching the surface of the Moon.

The fourth major SPLICE component, a hazard detection lidar, will be tested in the future via ground and flight tests.

Following Breadcrumbs

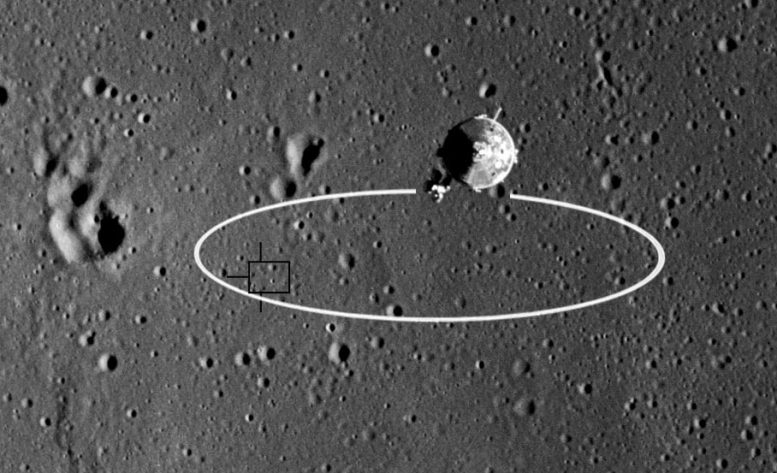

When a site is chosen for exploration, part of the consideration is to ensure enough room for a spacecraft to land. The size of the area, called the landing ellipse, reveals the inexact nature of legacy landing technology. The targeted landing area for Apollo 11 in 1969 was approximately 11 miles by 3 miles (18 kilometers by 5 kilometers), and astronauts piloted the lander. Subsequent robotic missions to Mars were designed for autonomous landings. Viking arrived on the Red Planet seven years later, in 1976, with a target ellipse of 174 miles by 62 miles (280 kilometers by 100 kilometers).

The Apollo 11 landing ellipse, shown here, was 11 miles by 3 miles. Precision landing technology will reduce landing area drastically, allowing for multiple missions to land in the same region. Credit: NASA

Technology has improved, and subsequent autonomous landing zones decreased in size. In 2012, the Curiosity rover landing ellipse was down to 12 miles by 4 miles (19 kilometers by 6 kilometers).
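To put those figures side by side, the footprint of each landing ellipse can be estimated from its quoted dimensions. The short Python sketch below is illustrative only: it assumes the quoted numbers are the full lengths of the major and minor axes, and the function name `ellipse_area_km2` is made up for this example.

```python
import math

def ellipse_area_km2(major_km: float, minor_km: float) -> float:
    """Area of an ellipse given its full major and minor axis lengths.

    Assumption: the article's "A by B" figures are full axis lengths,
    so the semi-axes are half of each.
    """
    return math.pi * (major_km / 2.0) * (minor_km / 2.0)

# Landing ellipse dimensions in kilometers, as quoted in the article.
ELLIPSES_KM = {
    "Apollo 11": (18.0, 5.0),
    "Viking": (280.0, 100.0),
    "Curiosity": (19.0, 6.0),
}

for mission, (major, minor) in ELLIPSES_KM.items():
    print(f"{mission}: {ellipse_area_km2(major, minor):,.0f} square kilometers")
```

Because area scales with the product of the two axes, shrinking both dimensions has a compounding effect on how much terrain mission planners must certify as safe.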

Being able to pinpoint a landing site will help future missions target areas for new scientific explorations in locations previously deemed too hazardous for an unpiloted landing. It will also enable advanced supply missions to send cargo and supplies to a single location, rather than spread out over miles.

Each planetary body has its own unique conditions. That’s why “SPLICE is designed to integrate with any spacecraft landing on a planet or moon,” said project manager Ron Sostaric. Based at NASA’s Johnson Space Center in Houston, Sostaric explained the project spans multiple centers across the agency.

Terrain relative navigation provides a navigation measurement by comparing real-time images to known maps of surface features during descent. Credit: NASA

“What we’re building is a complete descent and landing system that will work for future Artemis missions to the Moon and can be adapted for Mars,” he said. “Our job is to put the individual components together and make sure that it works as a functioning system.”

Atmospheric conditions might vary, but the process of descent and landing is the same. The SPLICE computer is programmed to activate terrain relative navigation several miles above the ground. The onboard camera photographs the surface, taking up to 10 pictures every second. Those are continuously fed into the computer, which is preloaded with satellite images of the landing field and a database of known landmarks.

Algorithms search the real-time imagery for the known features to determine the spacecraft’s location and navigate the craft safely to its expected landing point. It’s similar to navigating via landmarks, like buildings, rather than street names.

In the same way, terrain relative navigation identifies where the spacecraft is and sends that information to the guidance and control computer, which is responsible for executing the flight path to the surface. The computer will know approximately when the spacecraft should be nearing its target, almost like laying breadcrumbs and then following them to the final destination.

This process continues until approximately four miles above the surface.
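The landmark-matching idea can be illustrated with a toy calculation. The Python sketch below is not SPLICE’s algorithm; it is a minimal example under strong simplifying assumptions: the camera points straight down, the terrain is flat, the altitude is already known, image axes are aligned with map axes, and the matching of image features to map landmarks has already been done. The names `Match` and `estimate_position` are hypothetical.

```python
from dataclasses import dataclass
from statistics import fmean

@dataclass(frozen=True)
class Match:
    """One landmark found in a camera frame (hypothetical structure)."""
    map_x_m: float   # landmark position in the onboard map, meters
    map_y_m: float
    pixel_u: float   # where it appears in the image, pixels from image center
    pixel_v: float

def estimate_position(matches, altitude_m, focal_length_px):
    """Estimate the spacecraft's ground position from matched landmarks.

    With a downward-looking pinhole camera over flat ground, one pixel
    spans altitude / focal_length meters. Each landmark therefore implies
    a spacecraft position; averaging the implied positions reduces noise.
    """
    if not matches:
        raise ValueError("need at least one landmark match")
    meters_per_pixel = altitude_m / focal_length_px
    xs = [m.map_x_m - m.pixel_u * meters_per_pixel for m in matches]
    ys = [m.map_y_m - m.pixel_v * meters_per_pixel for m in matches]
    return fmean(xs), fmean(ys)

# Illustrative values only: two landmarks seen from 2,000 meters up.
frame = [
    Match(map_x_m=300.0, map_y_m=50.0, pixel_u=100.0, pixel_v=50.0),
    Match(map_x_m=0.0, map_y_m=-50.0, pixel_u=-50.0, pixel_v=0.0),
]
x_m, y_m = estimate_position(frame, altitude_m=2000.0, focal_length_px=1000.0)
print(f"Estimated ground position: x = {x_m:.1f} m, y = {y_m:.1f} m")
```

A flight system would also have to handle camera tilt, terrain relief, and mismatched features, which this sketch ignores.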

Laser Navigation

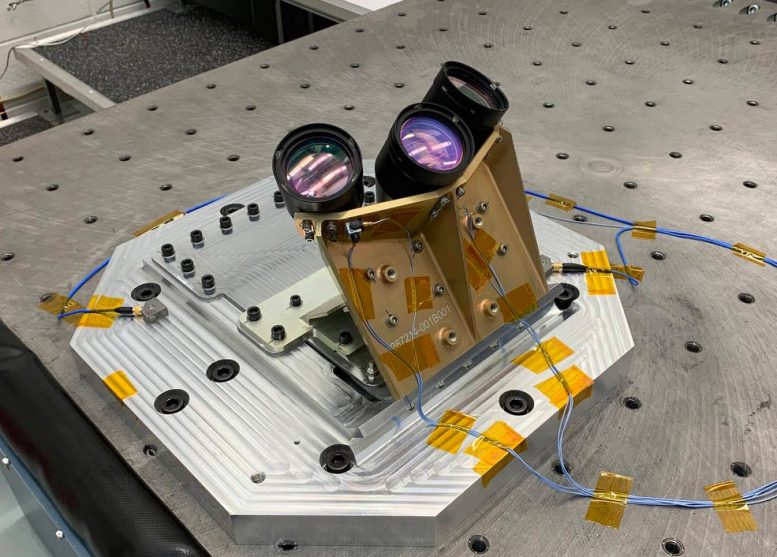

Knowing the exact position of a spacecraft is essential for the calculations needed to plan and execute a powered descent to a precise landing. Midway through the descent, the computer turns on the navigation Doppler lidar to take velocity and range measurements that further add to the precise navigation information coming from terrain relative navigation. Lidar (light detection and ranging) works in much the same way as a radar but uses light waves instead of radio waves. Three laser beams, each as narrow as a pencil, are pointed toward the ground. The light from these beams bounces off the surface, reflecting back toward the spacecraft.

NASA’s navigation Doppler lidar instrument consists of a chassis, containing electro-optic and electronic components, and an optical head with three telescopes. Credit: NASA

The travel time and wavelength of that reflected light are used to calculate how far the craft is from the ground, what direction it’s heading, and how fast it’s moving. These calculations are made 20 times per second for all three laser beams and fed into the guidance computer.
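The physics behind those measurements can be sketched in a few lines of Python. This is a simplified illustration, not the instrument’s actual processing: it uses the textbook relations that range is half the round-trip travel time multiplied by the speed of light, and that the speed along a beam is half the Doppler frequency shift multiplied by the laser wavelength. The beam directions, the 1.55-micrometer wavelength, and all function names are assumptions chosen for the example.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0

def range_from_travel_time(round_trip_s):
    """Distance to the surface: light covers the range twice."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def beam_speed_from_doppler(shift_hz, wavelength_m):
    """Speed along the beam; reflection doubles the Doppler shift."""
    return shift_hz * wavelength_m / 2.0

def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def velocity_from_beams(directions, beam_speeds):
    """Recover the 3D velocity from three along-beam speeds.

    Each beam measures dot(direction, velocity), giving three linear
    equations in three unknowns, solved here with Cramer's rule.
    """
    d = det3(directions)
    if abs(d) < 1e-12:
        raise ValueError("beam directions must not be coplanar")
    velocity = []
    for col in range(3):
        replaced = [
            [beam_speeds[r] if c == col else directions[r][c] for c in range(3)]
            for r in range(3)
        ]
        velocity.append(det3(replaced) / d)
    return tuple(velocity)

# Illustrative unit vectors (x, y, down); not the real beam geometry.
BEAMS = [(0.6, 0.0, 0.8), (0.0, 0.6, 0.8), (-0.6, 0.0, 0.8)]
WAVELENGTH_M = 1.55e-6  # assumed laser wavelength for this example

vx, vy, vdown = velocity_from_beams(BEAMS, [41.8, 38.8, 38.2])
print(f"Velocity: {vx:.2f}, {vy:.2f}, {vdown:.2f} m/s (x, y, down)")
```

Three beams are the minimum needed to resolve all three velocity components, which is consistent with the three-telescope design described above.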

Doppler lidar works successfully on Earth. However, Farzin Amzajerdian, the technology’s co-inventor and principal investigator from NASA’s Langley Research Center in Hampton, Virginia, is responsible for addressing the challenges for use in space.

“There are still some unknowns about how much signal will come from the surface of the Moon and Mars,” he said. If material on the ground is not very reflective, the signal back to the sensors will be weaker. But Amzajerdian is confident the lidar will outperform radar technology because the laser frequency is orders of magnitude greater than that of radio waves, which enables far greater precision and more efficient sensing.

Langley engineer John Savage inspects a section of the navigation Doppler lidar unit after its manufacture from a block of metal. Credit: NASA/David C. Bowman

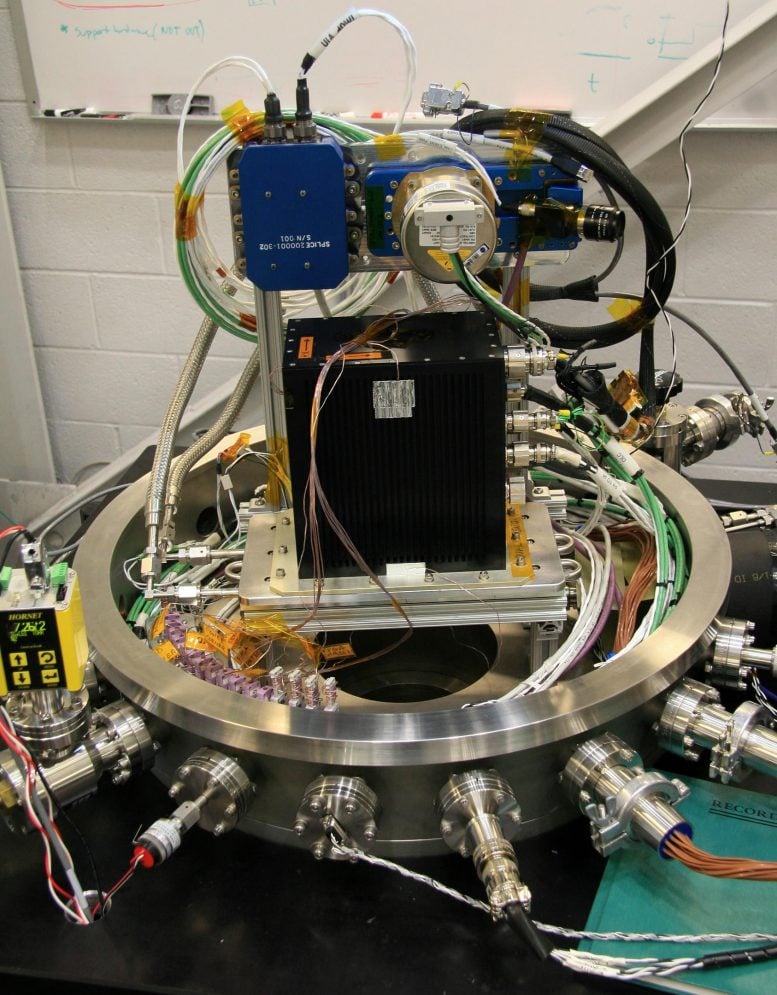

The workhorse responsible for managing all of this data is the descent and landing computer. Navigation data from the sensor systems is fed to onboard algorithms, which calculate new pathways for a precise landing.

Computer Powerhouse

The descent and landing computer synchronizes the functions and data management of individual SPLICE components. It must also integrate seamlessly with the other systems on any spacecraft. So, this small computing powerhouse keeps the precision landing technologies from overloading the primary flight computer.

The computational needs identified early on made it clear that existing computers were inadequate. NASA’s high-performance spaceflight computing processor would meet the demand but is still several years from completion. An interim solution was needed to get SPLICE ready for its first suborbital rocket flight test with Blue Origin on its New Shepard rocket. Data from the new computer’s performance will help shape its eventual replacement.

SPLICE hardware undergoing preparations for a vacuum chamber test. Three of SPLICE’s four main subsystems will have their first integrated test flight on a Blue Origin New Shepard rocket. Credit: NASA

John Carson, the technical integration manager for precision landing, explained that “the surrogate computer has very similar processing technology, which is informing both the future high-speed computer design, as well as future descent and landing computer integration efforts.”

Looking forward, test missions like these will help shape safe landing systems for missions by NASA and commercial providers on the surface of the Moon and other solar system bodies.

“Safely and precisely landing on another world still has many challenges,” said Carson. “There’s no commercial technology yet that you can go out and buy for this. Every future surface mission could use this precision landing capability, so NASA’s meeting that need now. And we’re fostering the transfer and use with our industry partners.”

“The size of the area, called the landing ellipse, reveals the inexact nature of legacy landing technology. The targeted landing area for Apollo 11 in 1968 was approximately 11 miles by 3 miles,”

The article omitted to mention that the “inexact legacy landing technology” then became so…exact… that the subsequent Apollo missions landed right on the spot marked with the big X. In fact, by Apollo 12, the system was so exact that Pete Conrad was asked by one of the programmers if he wanted to land on the edge of the crater containing Surveyor 3, or *in* the crater.

Conrad opted for the edge.