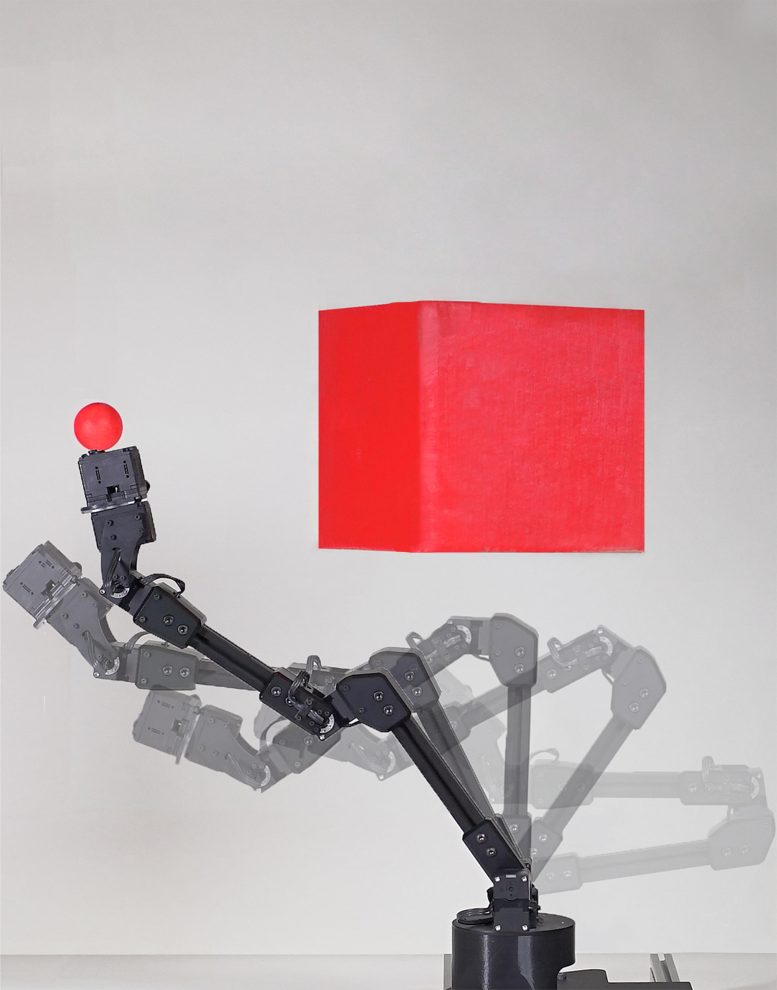

An artist’s concept of a robot learning to imagine itself.

A robot created by Columbia Engineers learns to understand itself rather than the environment around it.

Our perception of our bodies is not always correct or realistic, as any athlete or fashion-conscious person knows, but it’s a crucial factor in how we behave in society. Your brain is continuously preparing for movement while you play ball or get dressed so that you can move your body without bumping, tripping, or falling.

We humans develop our body models as infants, and robots are starting to do the same. A team at Columbia Engineering revealed today that they have developed a robot that, for the first time, can learn a model of its whole body from scratch, without any human aid. In a recent paper published in Science Robotics, the researchers explain how their robot built a kinematic model of itself and how it used that model to plan movements, accomplish objectives, and avoid obstacles in a range of scenarios. The robot even detected damage to its own body automatically and compensated for it.
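To make the planning claim more concrete, here is a minimal Python sketch. It assumes a toy two-link planar arm whose "self-model" is hand-written forward kinematics rather than a learned network. The link lengths, the circular obstacle, and the grid-search planner are all hypothetical. The sketch rejects any joint configuration whose predicted body would touch the obstacle, then keeps the collision-free pose that brings the arm's tip closest to a goal. It only picks a target pose and does not plan the path between poses.

```python
import math

def joint_positions(angles, links):
    """Forward kinematics (hypothetical toy arm): base, elbow and tip positions."""
    points = [(0.0, 0.0)]
    x = y = heading = 0.0
    for length, angle in zip(links, angles):
        heading += angle  # each joint angle is relative to the previous link
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points

def segment_point_distance(a, b, p):
    """Shortest distance from point p to the line segment a-b."""
    (ax, ay), (bx, by), (px, py) = a, b, p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        t = 0.0
    else:
        # Project p onto the segment and clamp to its endpoints.
        t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def plan_pose(goal, obstacle, radius, links=(1.0, 0.8), steps=72):
    """Grid-search joint angles; skip poses whose predicted body hits the obstacle,
    return the remaining pose whose tip lands closest to the goal."""
    best, best_dist = None, math.inf
    for i in range(steps):
        for j in range(steps):
            angles = (2 * math.pi * i / steps - math.pi,
                      2 * math.pi * j / steps - math.pi)
            pts = joint_positions(angles, links)
            if any(segment_point_distance(a, b, obstacle) <= radius
                   for a, b in zip(pts, pts[1:])):
                continue  # the self-model predicts a collision: reject this pose
            d = math.dist(pts[-1], goal)
            if d < best_dist:
                best, best_dist = angles, d
    return best, best_dist

if __name__ == "__main__":
    pose, miss = plan_pose(goal=(0.0, 1.5), obstacle=(1.0, 0.3), radius=0.3)
    print(pose, miss)
```

If no pose avoids the obstacle, `plan_pose` returns `(None, math.inf)`. A finer grid (larger `steps`) lands the tip closer to the goal, but the search takes longer.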

A robot can learn full-body morphology via visual self-modeling to adapt to multiple motion planning and control tasks. Credit: Jane Nisselson and Yinuo Qin/Columbia Engineering

The robot watches itself like an infant exploring itself in a hall of mirrors

The researchers placed a robotic arm within a circle of five streaming video cameras. The robot watched itself through the cameras as it undulated freely. Like an infant exploring itself for the first time in a hall of mirrors, the robot wiggled and contorted to learn how exactly its body moved in response to various motor commands. After about three hours, the robot stopped. Its internal deep neural network had finished learning the relationship between the robot’s motor actions and the volume it occupied in its environment.
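The learning step can be illustrated with a much simpler stand-in. The sketch below assumes a hypothetical two-link planar arm and replaces the study's deep neural network with a nearest-neighbour lookup. During random "motor babbling," it records which points the simulated cameras see the body occupy for each pair of joint angles. Later, it answers the question "would my body occupy this point in this pose?" using the most similar remembered pose. All names and parameters here are illustrative only.

```python
import math
import random

LINKS = (1.0, 0.8)  # hypothetical link lengths of a toy planar arm

def observe_body(angles, samples_per_link=20):
    """Stand-in for the cameras: 2D points sampled along the arm for given joint angles."""
    points = []
    x = y = heading = 0.0
    for length, angle in zip(LINKS, angles):
        heading += angle
        for i in range(1, samples_per_link + 1):
            t = length * i / samples_per_link
            points.append((x + t * math.cos(heading), y + t * math.sin(heading)))
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return points

def angle_diff(a, b):
    """Signed difference a - b wrapped into [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi

class SelfModel:
    """Nearest-neighbour memory from joint angles to observed body points
    (a simple substitute for the deep neural network used in the study)."""

    def __init__(self):
        self.memory = []  # list of (angles, body_points) pairs

    def babble(self, n_poses, rng):
        """Random motor babbling: try poses and record what the cameras see."""
        for _ in range(n_poses):
            angles = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
            self.memory.append((angles, observe_body(angles)))

    def predict_body(self, angles):
        """Return the recorded body points of the most similar remembered pose."""
        def distance(entry):
            return sum(angle_diff(p, q) ** 2 for p, q in zip(entry[0], angles))
        return min(self.memory, key=distance)[1]

    def occupies(self, point, angles, radius=0.05):
        """Predict whether the body would occupy `point` at the given joint angles."""
        return any(math.dist(point, p) <= radius for p in self.predict_body(angles))

if __name__ == "__main__":
    model = SelfModel()
    model.babble(2000, random.Random(0))
    pose = (0.3, -0.5)
    true_tip = observe_body(pose)[-1]
    predicted_tip = model.predict_body(pose)[-1]
    print("tip error:", math.dist(true_tip, predicted_tip))
```

Recording more poses shrinks the prediction error, which is the intuition behind the robot's hours of self-observation. A neural network also generalizes smoothly between poses, whereas this lookup simply snaps to the nearest one.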

“We were really curious to see how the robot imagined itself,” said Hod Lipson, professor of mechanical engineering and director of Columbia’s Creative Machines Lab, where the work was done. “But you can’t just peek into a neural network, it’s a black box.” After the researchers struggled with various visualization techniques, the self-image gradually emerged. “It was a sort of gently flickering cloud that appeared to engulf the robot’s three-dimensional body,” said Lipson. “As the robot moved, the flickering cloud gently followed it.” The robot’s self-model was accurate to about 1% of its workspace.

A technical summary of the study. Credit: Columbia Engineering

Self-modeling robots will lead to more self-reliant autonomous systems

The ability of robots to model themselves without help from engineers matters for several reasons: not only does it save labor, but it also lets the robot keep track of its own wear and tear, and even detect and compensate for damage. The authors argue that this ability is important because autonomous systems need to become more self-reliant. A factory robot, for instance, could detect that something isn't moving right and then compensate or call for assistance.
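A factory robot's self-check might look something like the following sketch. It assumes that the self-model predicts the arm's tip position. Here that model is hand-written kinematics for a hypothetical two-link arm, not the study's learned model. The sketch flags damage when the mean gap between predicted and observed positions exceeds a tolerance. The default of 1% of the workspace borrows the accuracy figure quoted earlier, but using it as a damage threshold is an assumption made for illustration. The refit step, a grid search over the length of the last link, is a simple stand-in for updating the self-model.

```python
import math

def tip_position(angles, links):
    """Forward kinematics of a planar serial arm: end-effector (x, y)."""
    x = y = heading = 0.0
    for length, angle in zip(links, angles):
        heading += angle
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y

def mean_tip_error(model_links, observe, poses):
    """Average distance between the self-model's predicted and the observed tip positions."""
    return sum(math.dist(tip_position(a, model_links), observe(a)) for a in poses) / len(poses)

def detect_damage(model_links, observe, poses, workspace_size, tolerance=0.01):
    """Flag damage when the mean error exceeds tolerance * workspace_size (assumed rule)."""
    error = mean_tip_error(model_links, observe, poses)
    return error > tolerance * workspace_size, error

def refit_last_link(model_links, observe, poses, candidates):
    """Compensate: choose the last-link length that best explains the new observations."""
    def error_for(length):
        return mean_tip_error(model_links[:-1] + (length,), observe, poses)
    best = min(candidates, key=error_for)
    return model_links[:-1] + (best,)

if __name__ == "__main__":
    model = (1.0, 0.8)  # the robot's current self-model (hypothetical)

    def damaged_arm(angles):
        # Hypothetical damage: the second link is now shorter than the model expects.
        return tip_position(angles, (1.0, 0.6))

    poses = [(i * 0.5, -i * 0.3) for i in range(10)]
    workspace = 2 * sum(model)  # diameter of the reachable disc
    print(detect_damage(model, damaged_arm, poses, workspace))
    print(refit_last_link(model, damaged_arm, poses, [k / 100 for k in range(101)]))
```

Checking many poses rather than one makes the damage flag less sensitive to a single noisy observation. In a real system, the observations would come from the cameras instead of a simulated arm.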

“We humans clearly have a notion of self,” explained the study’s first author Boyuan Chen, who led the work and is now an assistant professor at Duke University. “Close your eyes and try to imagine how your own body would move if you were to take some action, such as stretch your arms forward or take a step backward. Somewhere inside our brain we have a notion of self, a self-model that informs us what volume of our immediate surroundings we occupy, and how that volume changes as we move.”

Self-awareness in robots

The work is part of Lipson’s decades-long quest to find ways to grant robots some form of self-awareness. “Self-modeling is a primitive form of self-awareness,” he explained. “If a robot, animal, or human, has an accurate self-model, it can function better in the world, it can make better decisions, and it has an evolutionary advantage.”

The researchers are aware of the limits, risks, and controversies surrounding granting machines greater autonomy through self-awareness. Lipson is quick to admit that the kind of self-awareness demonstrated in this study is, as he noted, “trivial compared to that of humans, but you have to start somewhere. We have to go slowly and carefully, so we can reap the benefits while minimizing the risks.”

Reference: “Fully body visual self-modeling of robot morphologies” by Boyuan Chen, Robert Kwiatkowski, Carl Vondrick and Hod Lipson, 13 July 2022, Science Robotics.

DOI: 10.1126/scirobotics.abn1944

The study was funded by the Defense Advanced Research Projects Agency, the National Science Foundation, Facebook, and Northrop Grumman.

The authors declare no financial or other conflicts of interest.
