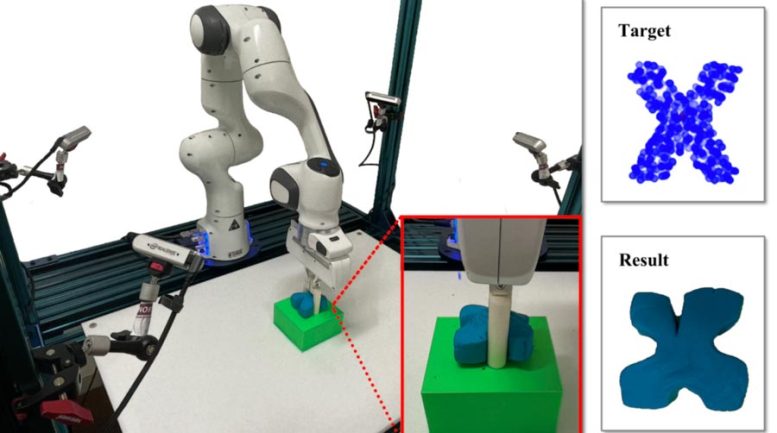

Researchers manipulate elasto-plastic objects into target shapes from visual cues. Credit: MIT CSAIL

Robots manipulate soft, deformable material into various shapes from visual inputs in a new system that could one day enable better home assistants.

Many of us feel an overwhelming sense of joy from our inner child when stumbling across a pile of the fluorescent, rubbery mixture of water, salt, and flour that put goo on the map: play dough. (Even if this rarely happens in adulthood.)

While manipulating play dough is fun and easy for 2-year-olds, the shapeless sludge is quite difficult for robots to handle. Machines have become increasingly reliable with rigid objects, but manipulating soft, deformable objects comes with a laundry list of technical challenges. A key difficulty is that, as with most flexible structures, moving one part is likely to affect everything else.

Recently, scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Stanford University let robots try their hand at playing with the modeling compound, but not for nostalgia’s sake. Their new system, called “RoboCraft,” learns directly from visual inputs to let a robot with a two-fingered gripper see, simulate, and shape doughy objects. It could reliably plan a robot’s behavior to pinch and release play dough to make various letters, including ones it had never seen. In fact, with just 10 minutes of data, the two-finger gripper rivaled human counterparts who teleoperated the machine, performing on par, and at times even better, on the tested tasks.

“Modeling and manipulating objects with high degrees of freedom are essential capabilities for robots to learn how to enable complex industrial and household interaction tasks, like stuffing dumplings, rolling sushi, and making pottery,” says Yunzhu Li, CSAIL PhD student and author of a new paper about RoboCraft. “While there have been recent advances in manipulating clothes and ropes, we found that objects with high plasticity, like dough or plasticine — despite ubiquity in those household and industrial settings — was a largely underexplored territory. With RoboCraft, we learn the dynamics models directly from high-dimensional sensory data, which offers a promising data-driven avenue for us to perform effective planning.”

When working with undefined, smooth materials, the whole structure must be taken into consideration before any efficient and effective modeling and planning can be done. RoboCraft transforms images into graphs of tiny particles and uses a graph neural network as the dynamics model. Together with planning algorithms, this provides more precise predictions about how the material changes shape.
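To make the "graphs of tiny particles" idea concrete, here is a minimal sketch of one plausible way to turn a set of particles into a graph: connect every pair of particles that lie within some radius of each other. The function name `build_particle_graph`, the radius value, and the sample coordinates are all illustrative assumptions; the article does not say how RoboCraft actually builds its graphs.

```python
import math
from itertools import combinations


def build_particle_graph(particles, radius):
    """Connect every pair of particles closer than `radius`.

    Returns an adjacency list: node index -> list of neighbor indices.
    This is an illustrative construction, not RoboCraft's actual one.
    """
    adjacency = {i: [] for i in range(len(particles))}
    for i, j in combinations(range(len(particles)), 2):
        if math.dist(particles[i], particles[j]) < radius:
            adjacency[i].append(j)
            adjacency[j].append(i)
    return adjacency


# Four hypothetical particles sampled from a dough surface (x, y, z).
particles = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (5.0, 5.0, 0.0),  # far from the others, so it gets no edges
]
graph = build_particle_graph(particles, radius=1.5)
print(graph)
```

In a graph like this, a graph neural network can pass information along the edges, which is one way to capture the fact that moving one part of the dough affects its neighbors.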

RoboCraft relies only on visual data rather than the complicated physics simulators that researchers often use to model and understand the dynamics and forces acting on objects. Three components work together within the system to form soft material into, say, an “R.”

Perception, the first part of the system, is all about learning to “see.” It uses cameras to gather raw visual sensor data from the environment, which is then turned into small clouds of particles that represent the shapes. In the second part, a graph-based neural network uses this particle data to learn to “simulate” the object’s dynamics, or how it moves. In the third, armed with the training data from many pinches, algorithms help plan the robot’s behavior so it learns to “shape” a blob of dough. While the letters are a little sloppy, they are unquestionably recognizable.
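The "simulate" and "shape" steps can be illustrated with a toy planner. The sketch below samples random pinch actions, predicts the result of each with a dynamics function, and keeps the action whose predicted shape is closest to the target. Everything here is an assumption made for illustration: `toy_dynamics` is a hand-written stand-in for a learned graph neural network, the two-number action (pinch position and squeeze amount) is hypothetical, and random sampling with a Chamfer-style distance is just one common planning recipe, not necessarily the one the researchers use.

```python
import math
import random


def chamfer_distance(a, b):
    """Chamfer-style distance: mean nearest-neighbor distance, both ways."""
    def one_way(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)


def toy_dynamics(particles, pinch_x, squeeze):
    """Hypothetical stand-in for a learned model.

    Pulls each particle's x-coordinate toward pinch_x by a fraction
    `squeeze` (0 = no change, 1 = fully collapsed onto pinch_x).
    """
    return [(x + squeeze * (pinch_x - x), y) for x, y in particles]


def plan_pinch(particles, target, n_samples=200, seed=0):
    """Random-shooting planner: try many actions, keep the best prediction."""
    rng = random.Random(seed)
    best_action, best_cost = None, float("inf")
    for _ in range(n_samples):
        action = (rng.uniform(-1.0, 1.0), rng.uniform(0.0, 1.0))
        predicted = toy_dynamics(particles, *action)
        cost = chamfer_distance(predicted, target)
        if cost < best_cost:
            best_action, best_cost = action, cost
    return best_action, best_cost


# A wide strip of "dough" that should be squeezed into a narrower one.
current = [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]
target = [(-0.5, 0.0), (0.0, 0.0), (0.5, 0.0)]

baseline = chamfer_distance(current, target)  # cost of doing nothing
action, cost = plan_pinch(current, target)
print(f"baseline cost {baseline:.3f}, planned cost {cost:.3f}, action {action}")
```

A real system would repeat this loop after each pinch, re-observing the dough with the cameras before planning the next move.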

Besides creating cutesy shapes, the team of researchers is (actually) working on making dumplings from dough and a prepared filling. That is a lot to ask at the moment with only a two-finger gripper. RoboCraft would also need a rolling pin, a stamp, and a mold (much as a baker requires various tools to work effectively).

Further in the future, the scientists envision using RoboCraft to assist with household tasks and chores, which could be of particular help to the elderly or those with limited mobility. To accomplish this, given the many obstructions that could arise, a much more adaptive representation of the dough or item would be needed, as well as an exploration into what class of models might be suitable to capture the underlying structural systems.

“RoboCraft essentially demonstrates that this predictive model can be learned in very data-efficient ways to plan motion. In the long run, we are thinking about using various tools to manipulate materials,” says Li. “If you think about dumpling or dough making, just one gripper wouldn’t be able to solve it. Helping the model understand and accomplish longer-horizon planning tasks, such as, how the dough will deform given the current tool, movements, and actions, is a next step for future work.”

Li wrote the paper alongside Haochen Shi, Stanford master’s student; Huazhe Xu, Stanford postdoc; Zhiao Huang, PhD student at the University of California at San Diego; and Jiajun Wu, assistant professor at Stanford. They will present the research at the Robotics: Science and Systems conference in New York City. The work is in part supported by the Stanford Institute for Human-Centered AI (HAI), the Samsung Global Research Outreach (GRO) Program, the Toyota Research Institute (TRI), and Amazon, Autodesk, Salesforce, and Bosch.
