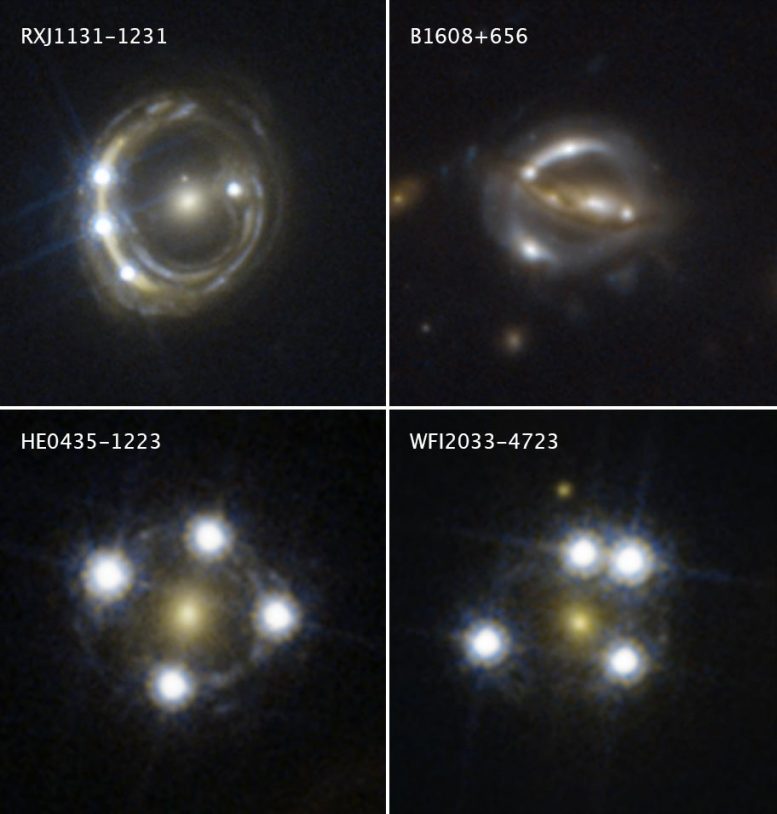

Each of these Hubble Space Telescope snapshots reveals four distorted images of a background quasar surrounding the central core of a foreground massive galaxy.

The multiple quasar images were produced by the gravity of the foreground galaxy, which is acting like a magnifying glass by warping the quasar’s light in an effect called gravitational lensing. Quasars are extremely distant cosmic streetlights produced by active black holes.

The light rays from each lensed quasar image take a slightly different path through space to reach Earth. The pathway’s length depends on the amount of matter that is distorting space along the line of sight to the quasar. To trace each pathway, the astronomers monitor the flickering of the quasar’s light as its black hole gobbles up material. When the light flickers, each lensed image brightens at a different time. This flickering sequence allows researchers to measure the time delays between each image as the lensed light travels along its path to Earth.

These time-delay measurements helped astronomers calculate how fast the universe is growing, a value called the Hubble constant.

The Hubble images were taken between 2003 and 2004 with the Advanced Camera for Surveys.

Credit: NASA, ESA, S.H. Suyu (Max Planck Institute for Astrophysics, Technical University of Munich, and Academia Sinica Institute of Astronomy and Astrophysics), and K.C. Wong (University of Tokyo’s Kavli Institute for the Physics and Mathematics of the Universe)

New Hubble Measurement Strengthens Discrepancy in Universe’s Expansion Rate

People use the phrase “Holy Cow” to express excitement. Playing with that phrase, researchers from an international collaboration developed an acronym—H0LiCOW—for their project’s name that expresses the excitement over their Hubble Space Telescope measurements of the universe’s expansion rate.

Knowing the precise value for how fast the universe expands is important for determining the age, size, and fate of the cosmos. Unraveling this mystery has been one of the greatest challenges in astrophysics in recent years.

Members of the H0LiCOW (H0 Lenses in COSMOGRAIL’s Wellspring) team used Hubble and a technique that is completely independent of any previous method to measure the universe’s expansion rate, a value called the Hubble constant.

This latest value represents the most precise measurement yet using the gravitational lensing method, where the gravity of a foreground galaxy acts like a giant magnifying lens, amplifying and distorting light from background objects. This latest study did not rely on the traditional “cosmic distance ladder” technique to measure accurate distances to galaxies by using various types of stars as “milepost markers.” Instead, the researchers employed the exotic physics of gravitational lensing to calculate the universe’s expansion rate.

The researchers’ result further strengthens a troubling discrepancy between the expansion rate calculated from measurements of the local universe and the rate as predicted from background radiation in the early universe, a time before galaxies and stars even existed. The new study adds evidence to the idea that new theories may be needed to explain what scientists are finding.

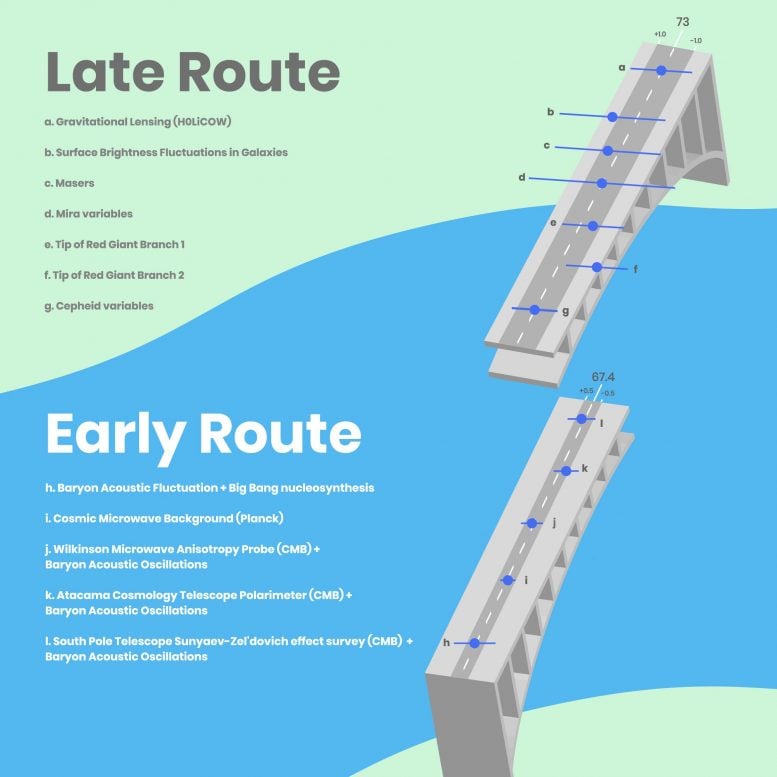

This graphic lists the variety of techniques astronomers have used to measure the expansion rate of the universe, known as the Hubble constant. Knowing the precise value for how fast the universe expands is important for determining the age, size, and fate of the cosmos.

One set of observations looked at the very early universe. Based on those measurements, astronomers calculated a Hubble constant value. A second set of observation strategies analyzed the universe’s expansion in the local universe.

The challenge to cosmologists is that these two approaches don’t arrive at the same value. It’s just as perplexing as two opposite sections of a bridge under construction not lining up. Clearly something is wrong, but what? Astrophysicists may need to rethink their ideas about the physical underpinnings of the observable universe.

The top half of the illustration outlines the seven different methods used to measure the expansion in the local universe. The letters corresponding to each technique are plotted on the bridge on the right. The location of each dot on the bridge road represents the measured value of the Hubble constant, while the length of the associated bar shows the estimated amount of uncertainty in the measurements. The seven methods combined yield an average Hubble constant value of 73 kilometers per second per megaparsec.

This number is at odds with the combined value of the techniques astronomers used to calculate the universe’s expansion rate from the early cosmos (shown in the bottom half of the graphic). However, these five techniques are generally more precise because they have lower estimated uncertainties, as shown in the plot on the bridge road. Their combined value for the Hubble constant is 67.4 kilometers per second per megaparsec.

Credit: NASA, ESA, and A. James (STScI)

A team of astronomers using NASA’s Hubble Space Telescope has measured the universe’s expansion rate using a technique that is completely independent of any previous method.


The researchers’ result further strengthens a troubling discrepancy between the expansion rate, called the Hubble constant, calculated from measurements of the local universe, and the rate as predicted from background radiation in the early universe, a time before galaxies and stars even existed.


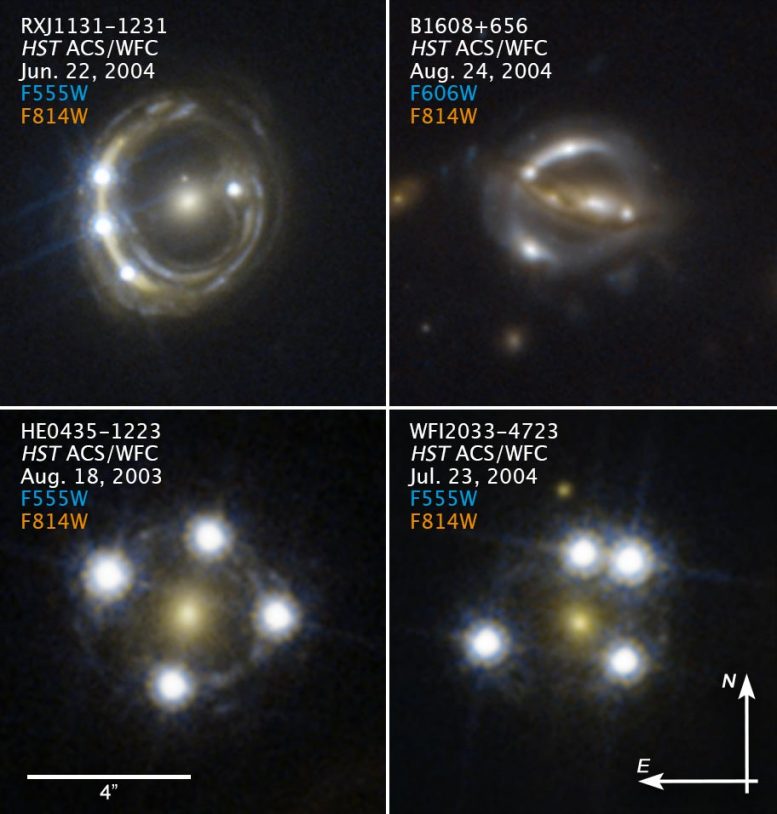

Annotated Compass Image of Gravitationally Lensed Quasars. Credit: NASA, ESA, S.H. Suyu (Max Planck Institute for Astrophysics, Technical University of Munich, and Academia Sinica Institute of Astronomy and Astrophysics), and K.C. Wong (University of Tokyo’s Kavli Institute for the Physics and Mathematics of the Universe)

The astronomy team that made the new Hubble constant measurements is dubbed H0LiCOW (H0 Lenses in COSMOGRAIL’s Wellspring). COSMOGRAIL is the acronym for Cosmological Monitoring of Gravitational Lenses, a large international project whose goal is monitoring gravitational lenses. “Wellspring” refers to the abundant supply of quasar lensing systems.

The research team derived the H0LiCOW value for the Hubble constant through observing and analysis techniques that have been greatly refined over the past two decades.

H0LiCOW and other recent measurements suggest a faster expansion rate in the local universe than was expected based on observations by the European Space Agency’s Planck satellite of how the cosmos behaved more than 13 billion years ago.

The gulf between the two values has important implications for understanding the universe’s underlying physical parameters and may require new physics to account for the mismatch.

“If these results do not agree, it may be a hint that we do not yet fully understand how matter and energy evolved over time, particularly at early times,” said H0LiCOW team leader Sherry Suyu of the Max Planck Institute for Astrophysics in Germany, the Technical University of Munich, and the Academia Sinica Institute of Astronomy and Astrophysics in Taipei, Taiwan.

How they did it

The H0LiCOW team used Hubble to observe the light from six faraway quasars, the brilliant searchlights from gas orbiting supermassive black holes at the centers of galaxies. Quasars are ideal background objects for many reasons; for example, they are bright, extremely distant, and scattered all over the sky. The telescope observed how the light from each quasar was multiplied into four images by the gravity of a massive foreground galaxy. The galaxies studied are 3 billion to 6.5 billion light-years away. The quasars’ average distance is 5.5 billion light-years from Earth.

The light rays from each lensed quasar image take a slightly different path through space to reach Earth. The pathway’s length depends on the amount of matter that is distorting space along the line of sight to the quasar. To trace each pathway, the astronomers monitor the flickering of the quasar’s light as its black hole gobbles up material. When the light flickers, each lensed image brightens at a different time.

This flickering sequence allows researchers to measure the time delays between each image as the lensed light travels along its path to Earth. To fully understand these delays, the team first used Hubble to make accurate maps of the distribution of matter in each lensing galaxy. Astronomers could then reliably deduce the distances from the galaxy to the quasar, from Earth to the galaxy, and from Earth to the background quasar. By comparing these distance values, the researchers measured the universe’s expansion rate.
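The delay measurement described above can be illustrated with a toy example. The sketch below is not the COSMOGRAIL pipeline. It assumes two evenly sampled, synthetic light curves: a random walk stands in for the quasar's flickering, and a hypothetical 20-day delay is built in. It then picks the time shift that maximizes the correlation between the two images. Real monitoring data have seasonal gaps, uneven sampling, and microlensing by stars in the lensing galaxy, all of which call for more careful methods.

```python
import numpy as np


def estimate_delay(t, flux_a, flux_b, max_lag):
    """Return the lag (same units as t) at which flux_b best matches flux_a.

    A positive result means image B brightens after image A.
    Assumes both curves share one evenly spaced time grid t (a simplification).
    """
    dt = t[1] - t[0]
    max_shift = int(round(max_lag / dt))
    best_shift, best_corr = 0, -np.inf
    for shift in range(-max_shift, max_shift + 1):
        # Align the overlapping parts of the two curves for this trial shift.
        if shift >= 0:
            x, y = flux_a[: len(flux_a) - shift], flux_b[shift:]
        else:
            x, y = flux_a[-shift:], flux_b[: len(flux_b) + shift]
        corr = np.corrcoef(x, y)[0, 1]  # Pearson correlation on the overlap
        if corr > best_corr:
            best_shift, best_corr = shift, corr
    return best_shift * dt


# Synthetic "flickering": one random walk seen twice, image B delayed by 20 days.
rng = np.random.default_rng(0)
t = np.arange(0.0, 400.0, 1.0)  # hypothetical daily sampling, in days
true_delay_days = 20
intrinsic = np.cumsum(rng.normal(size=t.size + true_delay_days))
flux_a = intrinsic[true_delay_days:] + rng.normal(scale=0.1, size=t.size)
flux_b = intrinsic[: t.size] + rng.normal(scale=0.1, size=t.size)

print(estimate_delay(t, flux_a, flux_b, max_lag=50.0))
```

Swapping the two curves flips the sign of the result, which is a quick way to check which image leads.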

“The length of each time delay indicates how fast the universe is expanding,” said team member Kenneth Wong of the University of Tokyo’s Kavli Institute for the Physics and Mathematics of the Universe, lead author of the H0LiCOW collaboration’s most recent paper. “If the time delays are shorter, then the universe is expanding at a faster rate. If they are longer, then the expansion rate is slower.”
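Wong's statement follows from how the delay is usually written in time-delay cosmography. The delay equals a "time-delay distance," D_dt = (1 + z_d) D_d D_s / D_ds, multiplied by the lens model's Fermat-potential difference and divided by the speed of light. Every distance in that ratio scales as 1/H0, so for a fixed mass model a shorter delay means a larger H0. The sketch below rests on several assumptions. It uses a flat universe with a matter fraction of 0.3. The lens redshift, source redshift, and Fermat-potential difference are hypothetical inputs chosen only for illustration and are not values from the H0LiCOW lenses.

```python
import numpy as np

C_KM_S = 299792.458                        # speed of light in km/s
KM_PER_MPC = 3.0857e19                     # kilometres per megaparsec
RAD_PER_ARCSEC = np.pi / (180.0 * 3600.0)  # radians per arcsecond
SECONDS_PER_DAY = 86400.0


def comoving_distance_mpc(z, h0, omega_m=0.3, n_steps=10_000):
    """Comoving distance to redshift z in an ASSUMED flat LambdaCDM universe, in Mpc."""
    zs = np.linspace(0.0, z, n_steps)
    inv_e = 1.0 / np.sqrt(omega_m * (1.0 + zs) ** 3 + (1.0 - omega_m))
    integral = np.sum(0.5 * (inv_e[1:] + inv_e[:-1]) * np.diff(zs))  # trapezoid rule
    return (C_KM_S / h0) * integral


def time_delay_distance_mpc(z_d, z_s, h0, omega_m=0.3):
    """D_dt = (1 + z_d) * D_d * D_s / D_ds, from flat-universe angular diameter distances."""
    dc_d = comoving_distance_mpc(z_d, h0, omega_m)
    dc_s = comoving_distance_mpc(z_s, h0, omega_m)
    d_d = dc_d / (1.0 + z_d)            # observer to lens
    d_s = dc_s / (1.0 + z_s)            # observer to source
    d_ds = (dc_s - dc_d) / (1.0 + z_s)  # lens to source
    return (1.0 + z_d) * d_d * d_s / d_ds


def predicted_delay_days(z_d, z_s, h0, delta_phi_arcsec2, omega_m=0.3):
    """Delay between two images whose Fermat-potential difference is delta_phi (arcsec^2)."""
    d_dt_km = time_delay_distance_mpc(z_d, z_s, h0, omega_m) * KM_PER_MPC
    delta_phi_rad2 = delta_phi_arcsec2 * RAD_PER_ARCSEC ** 2
    return d_dt_km * delta_phi_rad2 / C_KM_S / SECONDS_PER_DAY


def infer_h0(observed_delay, reference_delay, h0_ref):
    """With the mass model fixed, the delay scales as 1/H0, so H0 scales as 1/delay."""
    return h0_ref * reference_delay / observed_delay


# Hypothetical lens: z_d = 0.5, z_s = 2.0, Fermat-potential difference 0.3 arcsec^2.
for h0 in (67.4, 73.0):
    print(f"H0 = {h0}: predicted delay {predicted_delay_days(0.5, 2.0, h0, 0.3):.1f} days")
```

Here the other cosmological parameters are held fixed, so H0 enters only through the overall factor c/H0. A real analysis instead fits the mass model, the lens environment, and the cosmology together.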

The time-delay process is analogous to four trains leaving the same station at exactly the same time and traveling at the same speed to reach the same destination. However, each of the trains arrives at the destination at a different time. That’s because each train takes a different route, and the distance for each route is not the same. Some trains travel over hills. Others go through valleys, and still others chug around mountains. From the varied arrival times, one can infer that each train traveled a different distance to reach the same stop. Similarly, the quasar flickering pattern does not appear at the same time because some of the light is delayed by traveling around bends created by the gravity of dense matter in the intervening galaxy.

How it compares

The researchers calculated a Hubble constant value of 73 kilometers per second per megaparsec (with 2.4% uncertainty). This means that for every additional 3.3 million light-years (about one megaparsec) a galaxy is from Earth, it appears to be moving 73 kilometers per second faster because of the universe’s expansion.
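The conversion above is Hubble's law, v = H0 × d, with one megaparsec taken as about 3.26 million light-years. Here is a minimal sketch; the function name is illustrative. The linear law is only a good approximation for relatively nearby galaxies, and at billions of light-years the full cosmological distance relations are needed.

```python
LY_PER_MPC = 3.26156e6  # light-years in one megaparsec (approximate)


def recession_velocity_km_s(h0_km_s_mpc, distance_ly):
    """Hubble's law v = H0 * d, with the distance converted from light-years to megaparsecs."""
    distance_mpc = distance_ly / LY_PER_MPC
    return h0_km_s_mpc * distance_mpc


for d_ly in (3.3e6, 6.6e6, 33e6):
    v = recession_velocity_km_s(73.0, d_ly)
    print(f"{d_ly / 1e6:5.1f} million ly -> {v:6.1f} km/s")
```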

The team’s measurement also is close to the Hubble constant value of 74 calculated by the Supernova H0 for the Equation of State (SH0ES) team, which used the cosmic distance ladder technique. The SH0ES measurement is based on gauging the distances to galaxies near and far from Earth by using Cepheid variable stars and supernovas as measuring sticks to the galaxies.

The SH0ES and H0LiCOW values significantly differ from the Planck number of 67, strengthening the tension between Hubble constant measurements of the modern universe and the predicted value based on observations of the early universe.
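One common way to express such a "tension" is to divide the gap between two independent values by their combined uncertainty. The sketch below treats H0LiCOW's 2.4% as a symmetric Gaussian error and uses the 67.4 figure from the graphic caption. It also assumes a Planck uncertainty of 0.5 kilometers per second per megaparsec, a figure not given in this article. Under those assumptions the gap comes out at roughly 3 standard deviations.

```python
import math


def tension_sigma(value_a, err_a, value_b, err_b):
    """Gap between two independent measurements in units of their combined 1-sigma error.

    Assumes Gaussian, uncorrelated, symmetric uncertainties.
    """
    return abs(value_a - value_b) / math.hypot(err_a, err_b)


h0licow, h0licow_err = 73.0, 0.024 * 73.0  # 2.4% from the article, treated as symmetric
planck, planck_err = 67.4, 0.5             # ASSUMED Planck error; not stated in the article

print(f"H0LiCOW vs Planck: {tension_sigma(h0licow, h0licow_err, planck, planck_err):.1f} sigma")
```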

“One of the challenges we overcame was having dedicated monitoring programs through COSMOGRAIL to get the time delays for several of these quasar lensing systems,” said Frédéric Courbin of the Ecole Polytechnique Fédérale de Lausanne, leader of the COSMOGRAIL project.

Suyu added: “At the same time, new mass modeling techniques were developed to measure a galaxy’s matter distribution, including models we designed to make use of the high-resolution Hubble imaging. The images enabled us to reconstruct, for example, the quasars’ host galaxies. These images, along with additional wider-field images taken from ground-based telescopes, also allow us to characterize the environment of the lens system, which affects the bending of light rays. The new mass modeling techniques, in combination with the time delays, help us to measure precise distances to the galaxies.”

The H0LiCOW project began in 2012, and the team now has Hubble images and time-delay information for 10 lensed quasars and intervening lensing galaxies. The team will continue to search for and follow up on new lensed quasars in collaboration with researchers from two new programs. One program, called STRIDES (STRong-lensing Insights into Dark Energy Survey), is searching for new lensed quasar systems. The second, called SHARP (Strong-lensing at High Angular Resolution Program), uses adaptive optics with the W.M. Keck telescopes to image the lensed systems. The team’s goal is to observe 30 more lensed quasar systems to reduce their 2.4% uncertainty to 1%.
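The 30-lens target roughly matches what simple counting statistics suggest. Suppose each lens contributed an independent, equally sized error; this is an assumption, since systematic errors do not average down this way. The fractional uncertainty would then shrink as 1/√N. A sketch starting from the six lenses behind the 2.4% result:

```python
import math


def lenses_needed(current_n, current_frac_err, target_frac_err):
    """Lenses required if the fractional error falls as 1/sqrt(N) (statistical errors only)."""
    return math.ceil(current_n * (current_frac_err / target_frac_err) ** 2)


total = lenses_needed(current_n=6, current_frac_err=0.024, target_frac_err=0.01)
print(total, "lenses in total,", total - 6, "more than the six analyzed")
```

This gives 35 lenses in total, or 29 more than the six analyzed, which is close to the team's stated goal. Better per-lens models, such as those the article expects from Webb, would lower the required number.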

NASA’s upcoming James Webb Space Telescope, expected to launch in 2021, may help them achieve their goal of 1% uncertainty much faster through Webb’s ability to map the velocities of stars in a lensing galaxy, which will allow astronomers to develop more precise models of the galaxy’s distribution of dark matter.

The H0LiCOW team’s work also paves the way for studying hundreds of lensed quasars that astronomers are discovering through surveys such as the Dark Energy Survey and PanSTARRS (Panoramic Survey Telescope and Rapid Response System), and the upcoming National Science Foundation’s Large Synoptic Survey Telescope, which is expected to uncover thousands of additional sources.

In addition, NASA’s Wide Field Infrared Survey Telescope (WFIRST) will help astronomers address the disagreement in the Hubble constant value by tracing the expansion history of the universe. The mission will also use multiple techniques, such as sampling thousands of supernovae and other objects at various distances, to help determine whether the discrepancy results from measurement errors or observational techniques, or whether astronomers need to adjust the theory from which they derive their predictions.

The team will present its results at the 235th meeting of the American Astronomical Society in Honolulu, Hawaii.

The Hubble Space Telescope is a project of international cooperation between the European Space Agency (ESA) and NASA. NASA’s Goddard Space Flight Center in Greenbelt, Maryland, manages the telescope. The Space Telescope Science Institute (STScI) in Baltimore, Maryland, conducts Hubble science operations. STScI is operated for NASA by the Association of Universities for Research in Astronomy in Washington, D.C.

The present-day value of the Hubble rate is the one big outstanding question in cosmology.

The diagram of measurements presented here was produced by a fellow at the operations center for space telescopes, including the Hubble that H0LiCOW used [ https://en.wikipedia.org/wiki/Space_Telescope_Science_Institute ]. It suits a popular NASA text but not a press release, since it pointedly suggests a misfit and leaves out the mid-range observations. Those can be seen in a similar diagram from ESA [ https://sci.esa.int/web/planck/-/60504-measurements-of-the-hubble-constant ]. (There are more of these than shown in the diagram, such as gravitational waves and the tip of the red giant branch in the Hertzsprung–Russell diagram.) There are several possible explanations for a pattern like this, including remaining systematic errors that are unaccounted for.

The history of other measurements may shed light on the problem, for example the apparent bias and hugely underestimated uncertainty in early measurements of the speed of light [ https://arxiv.org/pdf/physics/0508199.pdf ; references omitted].

“Franklin terms these time-dependent shifts and trends “bandwagon effects,” and discusses a particularly dramatic case with |η₊₋|, the parameter that measures CP violation. As seen in Fig. 1, measurements of |η₊₋| before 1973 are systematically different from those after 1973.

Henrion and Fischhoff graphed experimental reports on the speed of light, as a function of the year of the experiment. Their graph (Fig. 2) shows that experimental results tend to cluster around a certain value for many years, and then suddenly jump to cluster around a new value, often many error bars from the previously accepted value. Two such jumps occur for the speed of light. Again, the bias is clearly present in the graph, but the exact mechanism by which it occurred is harder to discern.”

“The 1980 Particle Data Group explained [an earlier example] as follows: ‘A result can disagree with the average of all previous experiments by five standard deviations and still be right!’”

Of course, methods and data coverage improve, but we are also targeting harder problems, so the (estimated) uncertainties stay about the same. So maybe when we reach a 10-sigma tension we should start to worry (or be happy) that new physics may be the best explanation!?

To express accelerating expansion, consider time dilation: a difference in the elapsed time measured by two clocks, due either to their velocity relative to each other or to a gravitational potential difference between their locations. For a gravitational potential difference, such as near a black hole, clocks close to the black hole appear to tick more slowly than those farther away. Because of this effect, an object falling into a black hole appears to a distant observer to slow as it approaches the event horizon, taking an infinite time to reach it.

Reversing this perspective to that of an observer near a black hole, clocks farther from the black hole would appear to tick more quickly than those closer to it. But rather than being governed by a gravitational dilation of time, this would be governed by a dark energy distension of time.

This hypothesis is proposed simply to explore the concept of time distension as the rationale for emulating the condition of an increasingly expanding universe. From anywhere in the cosmos, the universe would seem to be expanding faster rather than slower because of time distension. This would validate the expansion of the universe as an increase over time in the distance between two distant parts of the universe: an intrinsic expansion whereby the scale of space itself changes.

The answer is better understood by thinking about gravity a bit differently. In fact, one has to reimagine the universe from the perspective of its makeup. Under the current standard model of cosmology, measurements decompose the total energy of the observable universe into 68% dark energy, 27% mass-energy in dark matter, and 5% mass-energy in positive density matter. Because black holes are significantly more energy dense than ordinary matter, it would then make more sense for black holes to be a product of dark matter rather than of condensed ordinary matter. This requires that we rethink these internal relationships of total energy.

Instead of considering the ‘Big Bang’ as a one-dimensional point modeled after a gravitational singularity, try rethinking this proposed one-dimensional point as a determinant of dark energy, or as the pre-existing fabric of space-time without any real matter. Remember that this pre-existing space-time without any real matter, ordinary or dark, does not readily express a differentiation between space and time; rather, it is more of a template for the emergence of matter into a multi-dimensional existence from within its medium of dark energy. Then start unfolding this single-dimensional frame of reference into existence: first into a two-dimensional space-time reference, which is an expansion of the one-dimensional reference, and then into a three-dimensional space-time reference, and so on.

As matter is engendered, via whatever theory you may support, the expectation is that this positive density matter is created within the pre-existing medium of dark energy. Indeed, the emergence of this positive density matter only displaces the pre-existing determinant of dark energy. Take away the positive density matter and you would still have a vessel of dark energy in which the matter once existed. Where the creation of positive density matter induces a displacement in the dark energy medium of this evolving space-time fabric, this displacement has its own properties. To maintain the pre-existing determinant of dark energy, the emergent matter is expected to preserve a balance of zero. This displacement would then be the complementary negative density matter on which the positive density matter can exist. Known as dark matter, this negative density matter acts as the complement of positive density matter. Their interrelationship would suggest that dark matter gives positive density matter the ability to interact, bond, and evolve; without the property of displacement, positive density matter would cease to exist.

However, the reverse may not be true. In the case of black holes, the expectation of a condensed mass is limited by what positive density matter really is. Rather, if dark matter is what insulates ordinary matter from annihilation, then it may be possible for dark matter to exist without ordinary matter. So if black holes are nothing but dark matter, it would also follow that dark matter can be accumulated separately from positive density matter. It would also follow that the gravitational force is more representative of condensed dark matter than of condensed ordinary matter. Under this hypothesis, then, one can expect a required transition that separates positive density matter from its complementary dark matter.

It starts with the disintegration of matter as it interacts with the event horizon of the black hole. As mass is squeezed by its own gravitational acceleration toward the black hole, akin to the spaghettification effect, its matter changes to allow for its disintegration via transmutation and the massive release of photons due to alpha decay and beta decay. In this effect, mass is collected within the event horizon into a plasma, increasing its photon density. The effect is like squeezing the dark matter out of mass, allowing the positive density matter to be reduced to its smallest constituent components. The dark matter is then absorbed into the black hole, and the remnants of reduced ordinary matter are discarded and radiated back out into the cosmos at high velocity.

If you’re interested in exploring this concept more, please review the alternative theories presented in the book, ‘The Evolutioning of Creation: Volume 2’, or even the ramifications of these concepts in the sci-fi fantasy adventure, ‘Shadow-Forge Revelations’. The theoretical presentation brings forth a variety of alternative perspectives on the aspects of existence that form our reality.

As the article says, “Knowing the precise value for how fast the universe expands is important for determining the age, size, and fate of the cosmos. Unraveling this mystery has been one of the greatest challenges in astrophysics in recent years. The new study adds evidence to the idea that new theories may be needed to explain what scientists are finding.” Perhaps concepts in String Theory will be that new theory.

Saul Perlmutter, Brian Schmidt, and Adam Riess found that analysis of light from distant supernovae showed that for the past 7 billion years the universe has been expanding faster than it did before, and they speculated that a mysterious Dark Energy was responsible. This work earned them the 2011 Nobel Prize. Billions of research dollars have been spent looking for Dark Energy without a glimmer of success.

Another way to explain Dark Energy is suggested by String Theory. All matter and energy, including photons (light), have vibrating strings as their basis.

String and anti-string pairs are speculated to be created in the quantum foam, a roiling energy field suggested by quantum mechanics, and they immediately annihilate each other. If light passes near these string/anti-string annihilations, perhaps some of that annihilation energy is absorbed by the strings in the light. Then the Fraunhofer lines in that light will shift slightly toward the blue, away from the redshift. As this continues in an expanding universe, we get the same curve that Perlmutter and colleagues displayed in their Nobel Prize lecture, without the need for Dark Energy.

This speculation is contrary to the current direction but has the universe behaving in a much more direct way. Specifics can be found in my YouTube video at https://www.youtube.com/watch?v=epk-SMXbu1c

How far have they gone to perpetuate a gravitational model for cosmology based on Einstein’s General Theory of Relativity? Belief in everything from ‘black holes’ to dark energy and the accelerating expansion of the universe has been propagated using Einstein’s theory. Has it become a religion masquerading as science? Einstein claimed that the bending of light passing near the Sun, famously measured by Arthur Eddington during a solar eclipse, and the precession of the orbit of Mercury around the Sun were both due to space-time deformation as characterized by his theory. In essence, he claimed that these phenomena are explained by the geometry near massive objects not being Euclidean. Einstein said that “in the presence of a gravitational field, the geometry is not Euclidean.” But if that non-Euclidean geometry is self-contradicting, then Einstein’s explanation and his theory cannot be correct. How can it be correct if the title of the Facebook Note, “Einstein’s General Theory of Relativity Is Based on Self-contradicting Non-Euclidean Geometry,” is a true statement? See the Facebook Note at the link:

https://www.facebook.com/notes/reid-barnes/einsteins-general-theory-of-relativity-is-based-on-self-contradicting-non-euclid/1676238042428763/

The answer to universal expansion is simple: it is not expanding as, or from, a singularity. The universe is not a singularly cohesive entity undergoing a uniform phenomenon. Rather, it is a conglomeration of myriad entities that are individually expanding at their own rates, which collectively contribute to the overall evident phenomenon of cosmological expansion. It is like a balloon full of balloons, where each individual balloon inside expands at a rate determined by the properties of its own contents; because the vast majority of the individual balloons are expanding (though I’m quite sure some may be contracting), the whole (as far as we can yet ascertain) is seen to expand.

We should therefore abandon any notion of a Hubble Constant and its mythical “Big Bang” driver and instead look to a more localised spatial environment for answers to the expansion of the universe. The evidence says so!

We need to measure the same objects using multiple methods, because perhaps the universe is not expanding uniformly.

Why is the universe expanding? This problem is answered in the third chapter of this paper:

http://vixra.org/abs/1912.0171

Lightspeed over time is in fact not constant. Do keep that in mind.

I am an information scientist who has studied ancient Indian myths. According to the ancient sages, the age of the Universe is 13.819 billion years. Since the Planck value for the age of the Universe is within 0.13% of the sages’ value, it seems that the Planck team is right about the Hubble constant. You are welcome to read my work at HereticScience.com